When you manage a server farm, it is often hard to get visibility into what is happening on it. We can never really know when a user is trying to bypass our system's security.

Logs (when they exist) are usually buried in the mass, and it is hard to spot abnormal behavior that could be a sign of an intrusion.

Using a log concentrator such as Loki or Elasticsearch is an effective way to centralize logs and make them easier to use. But this is not enough to detect dangerous actions that leave no log entries, such as opening a reverse shell, writing to a sensitive directory or searching for SSH keys.

Outside of attacks on exposed services (VPNs, web servers, SSH daemons, etc.), we are completely blind to what is happening on our servers. If a malicious actor gets in via a compromised SSH key, they can roam freely and will be very difficult to detect (SELinux and AppArmor block some attempts, though). This is where Falco comes in.

What is Falco?

Falco is a runtime threat detection engine for your systems. It is particularly well suited to containerized environments (Docker, Kubernetes) but is not limited to them.

It works by monitoring system calls and comparing them against predefined rules. As soon as an abnormal event is detected, Falco raises an alert. These rules are written in YAML and can be refined to match your environment.

In this article, we will see how to use Falco to be alerted of abnormal events on our servers, and how to set it up in a Kubernetes environment.

Note that Falco is a CNCF (Cloud Native Computing Foundation) graduated project, the same maturity level as Cilium, Rook, ArgoCD or Prometheus.

Let’s go and discover Falco from A to Y… with a good cup of coffee! (Z is out of reach for software that keeps evolving.)

Installation on a Debian 12 server

Let’s start by installing Falco on a Debian 12 server. To do this, we will go through an official APT repository.

```bash
curl -fsSL https://falco.org/repo/falcosecurity-packages.asc | \
  sudo gpg --dearmor -o /usr/share/keyrings/falco-archive-keyring.gpg

echo "deb [signed-by=/usr/share/keyrings/falco-archive-keyring.gpg] https://download.falco.org/packages/deb stable main" | sudo tee /etc/apt/sources.list.d/falcosecurity.list
```

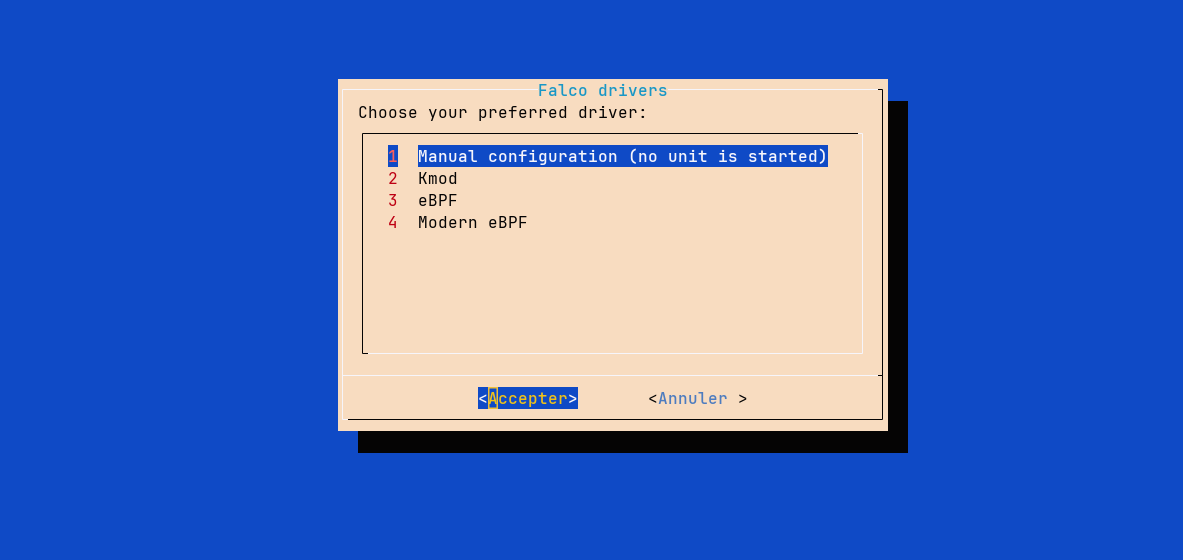

```bash
sudo apt-get update && sudo apt-get install -y falco
```

During the installation, you will be asked to choose a “driver”. This refers to the way Falco monitors system calls and events. You can choose between:

- Kernel module (Legacy)

- eBPF

- Modern eBPF

The kernel module is best avoided: it is much less efficient than eBPF and does not provide any additional functionality. The difference between eBPF and Modern eBPF lies in their implementation. The classic eBPF driver requires Falco to download a probe (an eBPF object built for the exact kernel version in use) and load it into the kernel. Because this probe is specific to the running kernel, it has to be downloaded again every time the kernel is updated.

The Modern eBPF driver is different because it follows the BPF CO-RE paradigm (Compile Once, Run Everywhere). The code to inject into the kernel is already embedded in the Falco binary, so no external driver is needed. On the other hand, it requires at least kernel 5.8, whereas classic eBPF works from kernel 4.14.

My machine has kernel 6.1.0, so I’ll choose Modern eBPF.
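To make this choice explicit, here is a small shell sketch that suggests a driver from the kernel release, using the minimum versions quoted above (5.8 for Modern eBPF, 4.14 for classic eBPF). The helper names are hypothetical, and falling back to the kernel module on older kernels is my assumption. The printed values follow the naming of Falco's engine.kind setting, which appears in the service command line shown further down. Note that the modern probe also relies on the kernel exposing BTF type information, which this version check does not verify.

```bash
#!/usr/bin/env bash
# Hypothetical helper, not part of Falco: suggest an engine.kind value
# (modern_ebpf, ebpf or kmod) from a kernel release string.

# version_ge A B: succeeds when version A >= version B, in `sort -V` order.
version_ge() {
  [[ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" == "$2" ]]
}

falco_driver_for_kernel() {
  local release=${1:-$(uname -r)}   # defaults to the running kernel
  local version=${release%%-*}      # "6.1.0-16-amd64" -> "6.1.0"
  if version_ge "$version" 5.8; then
    echo modern_ebpf                # CO-RE probe embedded in the Falco binary
  elif version_ge "$version" 4.14; then
    echo ebpf                       # kernel-specific probe, fetched per kernel
  else
    echo kmod                       # assumption: kernel module as last resort
  fi
}

falco_driver_for_kernel 6.1.0-16-amd64   # prints: modern_ebpf
```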

After that, I check that Falco is correctly installed with the command falco --version.

```
$ falco --version
Thu Apr 04 10:29:22 2024: Falco version: 0.37.1 (x86_64)
Thu Apr 04 10:29:22 2024: Falco initialized with configuration file: /etc/falco/falco.yaml
Thu Apr 04 10:29:22 2024: System info: Linux version 6.1.0-16-amd64 (debian-kernel@lists.debian.org) (gcc-12 (Debian 12.2.0-14) 12.2.0, GNU ld (GNU Binutils for Debian) 2.40) #1 SMP PREEMPT_DYNAMIC Debian 6.1.67-1 (2023-12-12)
{"default_driver_version":"7.0.0+driver","driver_api_version":"8.0.0","driver_schema_version":"2.0.0","engine_version":"31","engine_version_semver":"0.31.0","falco_version":"0.37.1","libs_version":"0.14.3","plugin_api_version":"3.2.0"}
```

I can then start the Falco service corresponding to my driver (falco-modern-bpf.service in my case, the other services being falco-kmod.service and falco-bpf.service) and check its status.

```
$ systemctl status falco-modern-bpf.service
● falco-modern-bpf.service - Falco: Container Native Runtime Security with modern ebpf
     Loaded: loaded (/lib/systemd/system/falco-modern-bpf.service; enabled; preset: enabled)
     Active: active (running) since Thu 2024-04-04 10:31:07 CEST; 44s ago
       Docs: https://falco.org/docs/
   Main PID: 2511 (falco)
      Tasks: 9 (limit: 3509)
     Memory: 32.8M
        CPU: 828ms
     CGroup: /system.slice/falco-modern-bpf.service
             └─2511 /usr/bin/falco -o engine.kind=modern_ebpf

avril 04 10:31:07 falco-linux falco[2511]: Falco initialized with configuration file: /etc/falco/falco.yaml
avril 04 10:31:07 falco-linux falco[2511]: System info: Linux version 6.1.0-16-amd64 (debian-kernel@lists.debian.org) (gcc-12 (Debian 12.2.0-14) 12.2.0, GNU ld (GNU Binutils for Debian) 2.4>
avril 04 10:31:07 falco-linux falco[2511]: Loading rules from file /etc/falco/falco_rules.yaml
avril 04 10:31:07 falco-linux falco[2511]: Loading rules from file /etc/falco/falco_rules.local.yaml
avril 04 10:31:07 falco-linux falco[2511]: The chosen syscall buffer dimension is: 8388608 bytes (8 MBs)
avril 04 10:31:07 falco-linux falco[2511]: Starting health webserver with threadiness 4, listening on 0.0.0.0:8765
avril 04 10:31:07 falco-linux falco[2511]: Loaded event sources: syscall
avril 04 10:31:07 falco-linux falco[2511]: Enabled event sources: syscall
avril 04 10:31:07 falco-linux falco[2511]: Opening 'syscall' source with modern BPF probe.
avril 04 10:31:07 falco-linux falco[2511]: One ring buffer every '2' CPUs.
```

We will come back to driver configuration in a later chapter.

Create a first Falco Rule

For the impatient, here is a first Falco rule that detects writes to binary directories that are not caused by package managers.

By default, rules are stored in the following files:

- /etc/falco/falco_rules.yaml → default rules
- /etc/falco/falco_rules.local.yaml → custom rules
- /etc/falco/rules.d/* → custom rules, dropped in as separate files

So we’re going to create our first rule in /etc/falco/falco_rules.local.yaml.

```yaml
- macro: bin_dir
  condition: (fd.directory in (/bin, /sbin, /usr/bin, /usr/sbin))

- list: package_mgmt_binaries
  items: [rpm_binaries, deb_binaries, update-alternat, gem, npm, python_package_managers, sane-utils.post, alternatives, chef-client, apk, snapd]

- macro: package_mgmt_procs
  condition: (proc.name in (package_mgmt_binaries))

- rule: Write below binary dir
  desc: >
    Trying to write to any file below specific binary directories can serve as an auditing rule to track general system changes.
    Such rules can be noisy and challenging to interpret, particularly if your system frequently undergoes updates. However, careful
    profiling of your environment can transform this rule into an effective rule for detecting unusual behavior associated with system
    changes, including compliance-related cases.
  condition: >
    open_write and evt.dir=<
    and bin_dir
    and not package_mgmt_procs
  output: File below a known binary directory opened for writing (file=%fd.name pcmdline=%proc.pcmdline gparent=%proc.aname[2] evt_type=%evt.type user=%user.name user_uid=%user.uid user_loginuid=%user.loginuid process=%proc.name proc_exepath=%proc.exepath parent=%proc.pname command=%proc.cmdline terminal=%proc.tty %container.info)
  priority: ERROR
```

We will see later how a rule is structured; for now, let's simply accept that this rule detects writes to binary directories that are not caused by package managers.

After creating our rule in the file /etc/falco/falco_rules.local.yaml, Falco should automatically reload the rule (if it doesn’t, we can do it manually via sudo systemctl reload falco-modern-bpf.service).

Now let’s try to trigger the alert we just created. In a first terminal, I display the Falco logs with journalctl -u falco-modern-bpf.service -f, and in a second one I run the command touch /bin/toto.

```
avril 04 11:18:38 falco-linux falco[2511]: 11:18:38.057651538: Error File below a known binary directory opened for writing (file=/bin/toto pcmdline=bash gparent=sshd evt_type=openat user=root user_uid=0 user_loginuid=0 process=touch proc_exepath=/usr/bin/touch parent=bash command=touch /bin/toto terminal=34816 container_id=host container_name=host)
```

Victory, we have triggered an alert! 🥳

On the other hand, it should not trigger when I install a package with apt:

```
$ apt install -y apache2
$ which apache2
/usr/sbin/apache2
```

The apache2 binary is in /usr/sbin (a directory watched by our Falco rule), yet no alert was triggered.

We are starting to understand how Falco works. Let's now go into a little more detail.

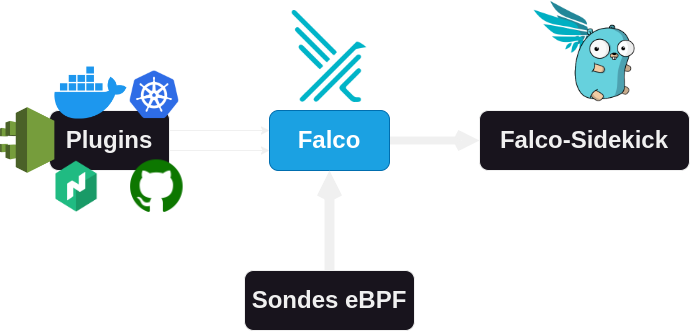

Falco Architecture

Falco is natively designed to work with eBPF (extended Berkeley Packet Filter) probes. These probes are programs loaded directly into the kernel to inform Falco (on the user-space side) of what is happening. Falco can also rely on a kernel module instead.

Information

What is eBPF?

eBPF (extended Berkeley Packet Filter) is a technology that runs sandboxed programs inside the Linux kernel without changing the kernel code or loading external modules. eBPF has been available since kernel 3.18 (2014), and eBPF programs can be attached to events. This way, the injected code only runs when a system call relevant to our needs is executed, which avoids burdening the kernel with unnecessary programs.

System Calls

Falco is an agent that monitors system calls using eBPF, or a kernel module when eBPF is not possible. Virtually every program makes system calls to interact with the kernel (they are needed to read or write a file, send a network request, etc.).

To see the system calls of a process, you can use the command strace. This allows you to launch a program and see the system calls that are made.

```
$ strace echo "P'tit kawa?"
execve("/usr/bin/echo", ["echo", "P'tit kawa?"], 0x7fff2442be98 /* 66 vars */) = 0
brk(NULL) = 0x629292ad2000
arch_prctl(0x3001 /* ARCH_??? */, 0x7fff1aa14580) = -1 EINVAL (Argument invalide)
mmap(NULL, 8192, PROT_READ|PROT_WRITE, MAP_PRIVATE|MAP_ANONYMOUS, -1, 0) = 0x7604dfe2e000
access("/etc/ld.so.preload", R_OK) = -1 ENOENT (Aucun fichier ou dossier de ce nom)
openat(AT_FDCWD, "/etc/ld.so.cache", O_RDONLY|O_CLOEXEC) = 3
newfstatat(3, "", {st_mode=S_IFREG|0644, st_size=110843, ...}, AT_EMPTY_PATH) = 0
mmap(NULL, 110843, PROT_READ, MAP_PRIVATE, 3, 0) = 0x7604dfe12000
close(3) = 0
openat(AT_FDCWD, "/lib/x86_64-linux-gnu/libc.so.6", O_RDONLY|O_CLOEXEC) = 3
read(3, "\177ELF\2\1\1\3\0\0\0\0\0\0\0\0\3\0>\0\1\0\0\0P\237\2\0\0\0\0\0"..., 832) = 832
pread64(3, "\6\0\0\0\4\0\0\0@\0\0\0\0\0\0\0@\0\0\0\0\0\0\0@\0\0\0\0\0\0\0"..., 784, 64) = 784
pread64(3, "\4\0\0\0 \0\0\0\5\0\0\0GNU\0\2\0\0\300\4\0\0\0\3\0\0\0\0\0\0\0"..., 48, 848) = 48
pread64(3, "\4\0\0\0\24\0\0\0\3\0\0\0GNU\0\302\211\332Pq\2439\235\350\223\322\257\201\326\243\f"..., 68, 896) = 68
newfstatat(3, "", {st_mode=S_IFREG|0755, st_size=2220400, ...}, AT_EMPTY_PATH) = 0
pread64(3, "\6\0\0\0\4\0\0\0@\0\0\0\0\0\0\0@\0\0\0\0\0\0\0@\0\0\0\0\0\0\0"..., 784, 64) = 784
mmap(NULL, 2264656, PROT_READ, MAP_PRIVATE|MAP_DENYWRITE, 3, 0) = 0x7604dfa00000
mprotect(0x7604dfa28000, 2023424, PROT_NONE) = 0
mmap(0x7604dfa28000, 1658880, PROT_READ|PROT_EXEC, MAP_PRIVATE|MAP_FIXED|MAP_DENYWRITE, 3, 0x28000) = 0x7604dfa28000
mmap(0x7604dfbbd000, 360448, PROT_READ, MAP_PRIVATE|MAP_FIXED|MAP_DENYWRITE, 3, 0x1bd000) = 0x7604dfbbd000
mmap(0x7604dfc16000, 24576, PROT_READ|PROT_WRITE, MAP_PRIVATE|MAP_FIXED|MAP_DENYWRITE, 3, 0x215000) = 0x7604dfc16000
mmap(0x7604dfc1c000, 52816, PROT_READ|PROT_WRITE, MAP_PRIVATE|MAP_FIXED|MAP_ANONYMOUS, -1, 0) = 0x7604dfc1c000
close(3) = 0
mmap(NULL, 12288, PROT_READ|PROT_WRITE, MAP_PRIVATE|MAP_ANONYMOUS, -1, 0) = 0x7604dfe0f000
arch_prctl(ARCH_SET_FS, 0x7604dfe0f740) = 0
set_tid_address(0x7604dfe0fa10) = 12767
set_robust_list(0x7604dfe0fa20, 24) = 0
rseq(0x7604dfe100e0, 0x20, 0, 0x53053053) = 0
mprotect(0x7604dfc16000, 16384, PROT_READ) = 0
mprotect(0x6292924c8000, 4096, PROT_READ) = 0
mprotect(0x7604dfe68000, 8192, PROT_READ) = 0
prlimit64(0, RLIMIT_STACK, NULL, {rlim_cur=8192*1024, rlim_max=RLIM64_INFINITY}) = 0
munmap(0x7604dfe12000, 110843) = 0
getrandom("\xe1\x15\x54\xd3\xf5\xa1\x30\x4d", 8, GRND_NONBLOCK) = 8
brk(NULL) = 0x629292ad2000
brk(0x629292af3000) = 0x629292af3000
openat(AT_FDCWD, "/usr/lib/locale/locale-archive", O_RDONLY|O_CLOEXEC) = 3
newfstatat(3, "", {st_mode=S_IFREG|0644, st_size=15751120, ...}, AT_EMPTY_PATH) = 0
mmap(NULL, 15751120, PROT_READ, MAP_PRIVATE, 3, 0) = 0x7604dea00000
close(3) = 0
newfstatat(1, "", {st_mode=S_IFCHR|0620, st_rdev=makedev(0x88, 0x3), ...}, AT_EMPTY_PATH) = 0
write(1, "P'tit kawa?\n", 12P'tit kawa?
) = 12
close(1) = 0
close(2) = 0
exit_group(0) = ?
+++ exited with 0 +++
```

It is precisely these system calls that Falco monitors to detect potential anomalies.

But why not just use strace and skip eBPF altogether?

strace is not a viable solution for several reasons, including that it only traces the processes you launch with it or explicitly attach to, rather than the whole system. Furthermore, it works the opposite way from what we need: it shows the system calls of a process we already chose to watch, but it cannot react to system calls to raise an alert about a malicious process.

Sysdig (the company behind Falco) also offers an eponymous tool that displays system calls and can record them to a file, which makes it easier to write Falco rules.

```bash
VERSION="0.36.0"
wget https://github.com/draios/sysdig/releases/download/${VERSION}/sysdig-${VERSION}-x86_64.deb
dpkg -i sysdig-${VERSION}-x86_64.deb
```

I pinned version 0.36.0 so that you get the same results as I do, but I of course encourage you to use the latest available version.

With the command sysdig proc.name=chmod, we can view the system calls made by this command:

```
153642 15:18:57.468174047 1 chmod (72012.72012) < execve res=0 exe=chmod args=777.README.md. tid=72012(chmod) pid=72012(chmod) ptid=71438(bash) cwd=<NA> fdlimit=1024 pgft_maj=0 pgft_min=33 vm_size=432 vm_rss=4 vm_swap=0 comm=chmod cgroups=cpuset=/user.slice.cpu=/user.slice/user-0.slice/session-106.scope.cpuacct=/.i... env=SHELL=/bin/bash.LESS= -R.PWD=/root.LOGNAME=root.XDG_SESSION_TYPE=tty.LS_OPTIO... tty=34823 pgid=72012(chmod) loginuid=0(root) flags=1(EXE_WRITABLE) cap_inheritable=0 cap_permitted=1FFFFFFFFFF cap_effective=1FFFFFFFFFF exe_ino=654358 exe_ino_ctime=2023-12-29 16:08:00.974303000 exe_ino_mtime=2022-09-20 17:27:27.000000000 uid=0(root) trusted_exepath=/usr/bin/chmod
153643 15:18:57.468204086 1 chmod (72012.72012) > brk addr=0
153644 15:18:57.468205471 1 chmod (72012.72012) < brk res=55B917192000 vm_size=432 vm_rss=4 vm_swap=0
153645 15:18:57.468270944 1 chmod (72012.72012) > mmap addr=0 length=8192 prot=3(PROT_READ|PROT_WRITE) flags=10(MAP_PRIVATE|MAP_ANONYMOUS) fd=-1(EPERM) offset=0
```

We will use this tool again later to write Falco rules.

Getting alerted when something happens

It’s all well and good to be aware of an intrusion, but if no one is there to react it’s not much use!

This is why we are going to install a second application: Falco Sidekick.

Its role is to receive alerts from Falco and redirect them to external tools (Mail, Alertmanager, Slack, etc.). To install it, we can directly download the binary and create a systemd service (ideally, it should be deployed on an isolated machine to prevent an attacker from disabling it).

```bash
VER="2.28.0"
wget -c https://github.com/falcosecurity/falcosidekick/releases/download/${VER}/falcosidekick_${VER}_linux_amd64.tar.gz -O - | tar -xz
chmod +x falcosidekick
sudo mv falcosidekick /usr/local/bin/
sudo touch /etc/systemd/system/falcosidekick.service
sudo chmod 664 /etc/systemd/system/falcosidekick.service
```

We edit the file /etc/systemd/system/falcosidekick.service and add the following content:

```ini
[Unit]
Description=Falcosidekick
After=network.target
StartLimitIntervalSec=0

[Service]
Type=simple
Restart=always
RestartSec=1
ExecStart=/usr/local/bin/falcosidekick -c /etc/falcosidekick/config.yaml

[Install]
WantedBy=multi-user.target
```

But before starting it, we need to create the configuration file /etc/falcosidekick/config.yaml.

I manage my alerts with Alertmanager and I want to continue using it for Falco alerts because it allows me to route notifications to Gotify or Email depending on their priority.

```yaml
debug: false
alertmanager:
  hostport: "http://192.168.1.89:9093"
```

To start the service, we use systemctl:

```bash
systemctl daemon-reload
systemctl enable --now falcosidekick
```

Now we need to tell our Falco agent to send its events to Falco-Sidekick. To do this, I edit the configuration in /etc/falco/falco.yaml to enable the HTTP output to Falco-Sidekick.

Here are the values to edit:

```yaml
json_output: true
json_include_output_property: true
http_output:
  enabled: true
  url: http://192.168.1.105:2801/ # Falco-Sidekick IP:port
```

I restart the Falco service to apply these settings, then create a first test alert:

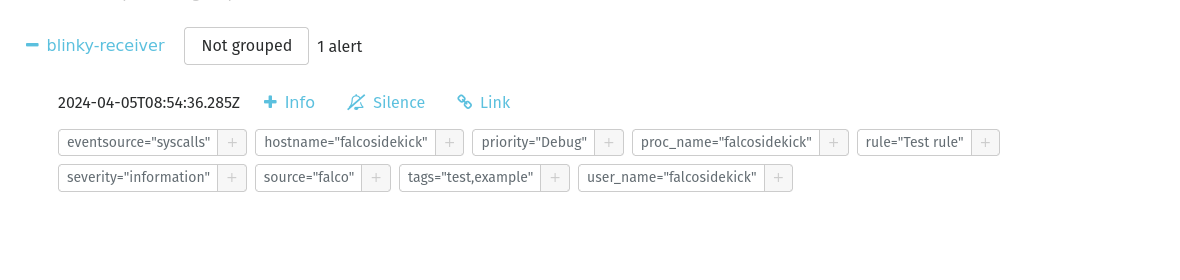

```bash
curl -sI -XPOST http://192.168.1.105:2801/test
```

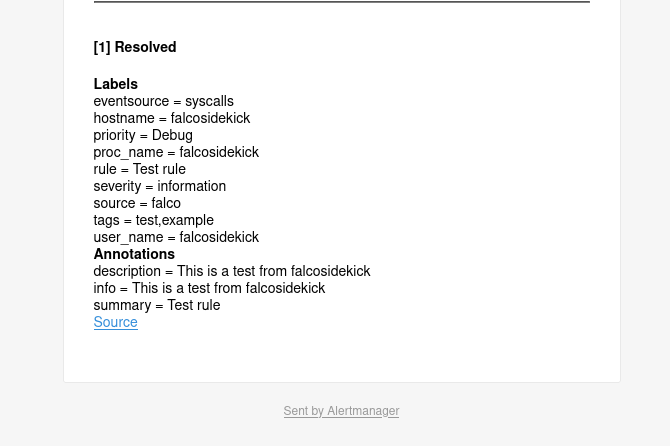

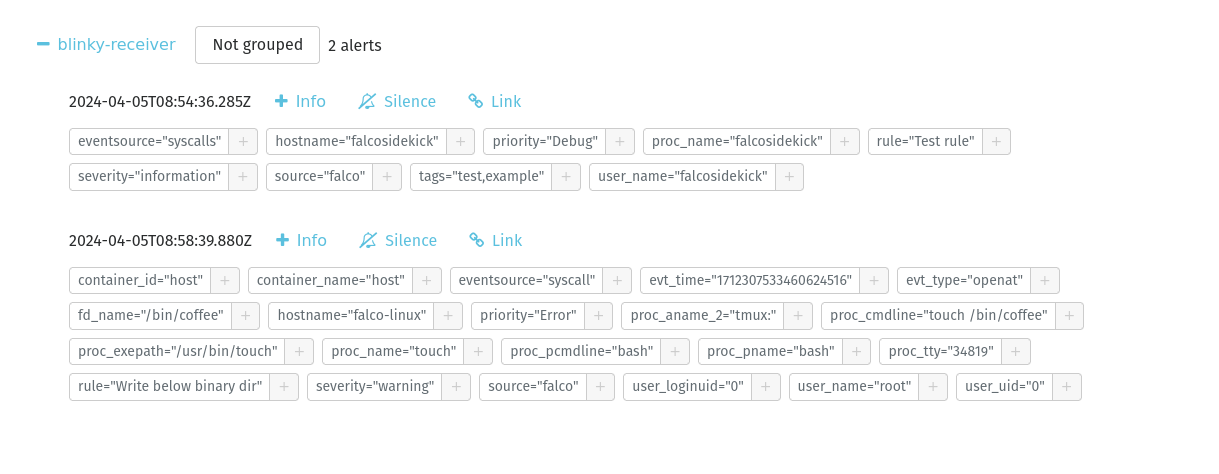

I receive this test alert in my Alertmanager, and even by email (thanks to the SMTP integration configured in my Alertmanager):

Now let’s try to trigger the alert from the Write below binary dir rule we created earlier.

The touch /bin/coffee command is indeed reported directly to my Alertmanager!

We can also see details such as the process that made the system call, touch (created by bash, itself launched by tmux).

To monitor Falco-Sidekick you can use the following endpoints:

- /ping: returns “pong” in plain text
- /healthz: returns { 'status': 'ok' }
- /metrics: exports metrics for Prometheus

Web interface for Falco

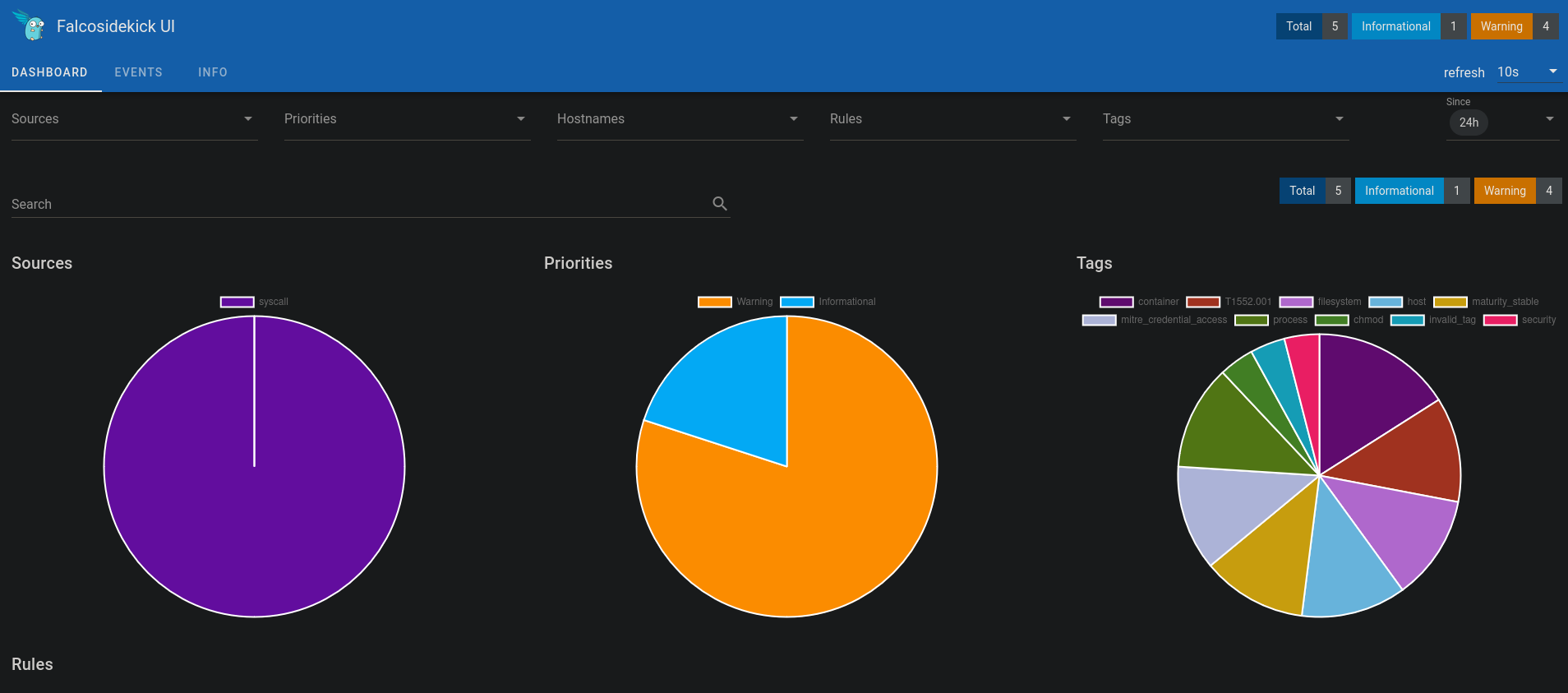

Having coupled Falco-Sidekick with Alertmanager to be notified of incidents is a good thing, but if you want something simpler, with a web interface to browse alerts, you can use Falcosidekick UI.

Falcosidekick UI is a web interface that receives alerts from Falco-Sidekick. It stores them in a Redis database and displays them in a dashboard.

We can deploy it with Docker Compose as follows:

```yaml
version: '3'
services:
  falco-sidekick-ui:
    image: falcosecurity/falcosidekick-ui:2.3.0-rc2
    restart: always
    ports:
      - "2802:2802"
    environment:
      - FALCOSIDEKICK_UI_REDIS_URL=redis:6379
      - FALCOSIDEKICK_UI_USER=weare:coffeelovers
    depends_on:
      - redis
  redis:
    image: redis/redis-stack:7.2.0-v9
```

Once the images are pulled, we can start the containers with docker-compose up -d and log in to the web interface with the credentials weare:coffeelovers.

Next, I need to tell Falco-Sidekick to forward alerts to Falcosidekick UI. To do this, I edit my configuration file /etc/falcosidekick/config.yaml:

```yaml
debug: false
alertmanager:
  hostport: "http://192.168.1.89:9093"
webui:
  url: "http://192.168.1.105:2802"
```

With this configuration, both the web interface and Alertmanager receive the events.

After a few alerts are triggered, my interface fills up a bit.

I can then sort the alerts by type, rule, priority, etc.

I can also go back to earlier alerts to spot recurring events on the monitored machines.

Information

In the current case, there is no persistence of data in the Redis database. If you restart the container, you will lose all previous alerts. If Falco-Sidekick UI is used in production, a persistent database should be implemented.

Now that we have what we need to visualize alerts, we will see how to create Falco rules.

Falco Rules

A Falco rule is composed of several elements: a name, a description, a condition, an output, a priority and tags.

Here is an example of a Falco rule:

```yaml
- rule: Program run with disallowed http proxy env
  desc: >
    Detect curl or wget usage with HTTP_PROXY environment variable. Attackers can manipulate the HTTP_PROXY variable's
    value to redirect application's internal HTTP requests. This could expose sensitive information like authentication
    keys and private data.
  condition: >
    spawned_process
    and (proc.name in (http_proxy_binaries))
    and ( proc.env icontains HTTP_PROXY OR proc.env icontains HTTPS_PROXY )
  output: Curl or wget run with disallowed HTTP_PROXY environment variable (env=%proc.env evt_type=%evt.type user=%user.name user_uid=%user.uid user_loginuid=%user.loginuid process=%proc.name proc_exepath=%proc.exepath parent=%proc.pname command=%proc.cmdline terminal=%proc.tty exe_flags=%evt.arg.flags %container.info)
  priority: NOTICE
  tags: [maturity_incubating, host, container, users, mitre_execution, T1204]
```

The name, description, tags and priority appear in the report of the suspicious event. It is worth writing these fields carefully so that alerts are easy to read from an external tool.

While proc.env icontains HTTP_PROXY is rather easy to understand, what about spawned_process or http_proxy_binaries?

Let’s start by explaining the fields used to give an alert its context.

The fields

Fields are the variables used in Falco rules. They differ depending on the event (the system call) we want to monitor. There are several classes of fields, for example:

- evt: generic event information (evt.time, evt.type, evt.dir).
- proc: process information (proc.name, proc.cmdline, proc.tty).
- user/group: information about the user who triggered the event (user.name, group.gid).
- fd: information about open files and connections (fd.ip, fd.name).
- container: information about the Docker container or Kubernetes pod (container.id, container.name, container.image).
- k8s: information about Kubernetes objects (k8s.ns.name, k8s.pod.name).

A condition should generally start by restricting the event type (with evt.type, directly or through a macro such as spawned_process or open_write) before narrowing the context with other fields (such as the process name, the user who ran it or the opened file).

To see the complete list of fields, you can consult the official documentation or run the command falco --list=syscall.

Operators

The following operators can be used in the conditions of Falco rules.

| Operators | Description |
|---|---|
| =, != | Equality and inequality operators. |
| <=, <, >=, > | Comparison operators for numeric values. |
| contains, icontains | For strings, returns “true” if a string contains another string; icontains is the case-insensitive version. For flags, returns “true” if the flag is set. Examples: proc.cmdline contains "-jar", evt.arg.flags contains O_TRUNC. |
| startswith, endswith | Checks the prefix or suffix of strings. |
| glob | Evaluates standard glob patterns. Example: fd.name glob "/home/*/.ssh/*". |
| in | Evaluates whether the given set (which may have only one element) is entirely contained in another set. Example: (b,c,d) in (a,b,c) returns false since d is not contained in the compared set (a,b,c). |
| intersects | Evaluates whether the given set (which may have only one element) has at least one element in common with another set. Example: (b,c,d) intersects (a,b,c) returns true since both sets contain b and c. |
| pmatch | (Prefix match) Compares a file path to a set of file or directory prefixes. Example: fd.name pmatch (/tmp/hello) returns true against /tmp/hello and /tmp/hello/world but not against /tmp/hello_world. |
| exists | Checks whether a field is set. Example: k8s.pod.name exists. |
| bcontains, bstartswith | (Binary contains) These operators work like contains and startswith but perform a byte match against a raw string of bytes, taking a hexadecimal string as input. Examples: evt.buffer bcontains CAFE, evt.buffer bstartswith CAFE_. |

So, to compare the process name, we can use conditions such as proc.name = sshd or proc.name contains sshd.
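Among the operators in the table above, the difference between pmatch and a plain prefix test is the easiest to miss. The shell sketch below models the pmatch behavior described in the table (the path equals a prefix or lies below it) next to a naive string prefix test. It only illustrates the semantics and is not Falco's implementation; the function names are hypothetical, and ignoring a trailing slash on the prefix is this sketch's own choice.

```bash
#!/usr/bin/env bash
# pmatch PATH PREFIX...: succeeds if PATH equals one of the prefixes
# or lies below it as a directory.
pmatch() {
  local path=$1 prefix
  shift
  for prefix in "$@"; do
    prefix=${prefix%/}   # sketch's choice: "/tmp/hello/" behaves like "/tmp/hello"
    if [[ $path == "$prefix" || $path == "$prefix"/* ]]; then
      return 0
    fi
  done
  return 1
}

# startswith STRING PREFIX: naive character prefix test, for comparison.
startswith() { [[ $1 == "$2"* ]]; }

for path in /tmp/hello /tmp/hello/world /tmp/hello_world; do
  pm=false; sw=false
  pmatch "$path" /tmp/hello && pm=true
  startswith "$path" /tmp/hello && sw=true
  echo "$path pmatch=$pm startswith=$sw"
done
# /tmp/hello pmatch=true startswith=true
# /tmp/hello/world pmatch=true startswith=true
# /tmp/hello_world pmatch=false startswith=true
```

Only the last path separates the two: a prefix test on characters accepts /tmp/hello_world, while pmatch treats /tmp/hello as a directory boundary.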

When part of a condition has no operator, it is a macro. Let’s see what that means.

Macros and Lists

Macros are “variables” that can be used in Falco rules to make the rules easier to read and maintain, or to reuse frequently used conditions.

For example, rather than repeating the condition proc.name in (rpm, dpkg, apt, yum) in all 10 rules that use it, we can create a macro package_mgmt_procs and a list package_mgmt_binaries containing the names of the package management processes.

```yaml
- list: package_mgmt_binaries
  items: [rpm_binaries, deb_binaries, update-alternat, gem, npm, python_package_managers, sane-utils.post, alternatives, chef-client, apk, snapd]

- macro: package_mgmt_procs
  condition: (proc.name in (package_mgmt_binaries))
```

So in the following rule, we can simply use package_mgmt_procs to check whether the process is a package manager: the macro evaluates to true if it is.

```yaml
- rule: Write below binary dir
  desc: >
    Trying to write to any file below specific binary directories can serve as an auditing rule to track general system changes.
    Such rules can be noisy and challenging to interpret, particularly if your system frequently undergoes updates. However, careful
    profiling of your environment can transform this rule into an effective rule for detecting unusual behavior associated with system
    changes, including compliance-related cases.
  condition: >
    open_write and evt.dir=<
    and bin_dir
    and not package_mgmt_procs
  output: File below a known binary directory opened for writing (file=%fd.name pcmdline=%proc.pcmdline gparent=%proc.aname[2] evt_type=%evt.type user=%user.name user_uid=%user.uid user_loginuid=%user.loginuid process=%proc.name proc_exepath=%proc.exepath parent=%proc.pname command=%proc.cmdline terminal=%proc.tty %container.info)
  priority: ERROR
```

The result is a rule that is very easy to read, built from pieces that can be reused in other contexts.

The above rule comes from the official Falco documentation. But knowing the fields and operators, we can easily create our own rules.

In the following example, I want to detect whether a user is searching for sensitive files (such as SSH keys or Kubernetes configuration files).

```yaml
- list: searching_binaries
  items: ['grep', 'fgrep', 'egrep', 'rgrep', 'locate', 'find']

- rule: search for sensitives files
  desc: Detect if someone is searching for a sensitive file
  condition: >
    spawned_process and proc.name in (searching_binaries) and
    (
      proc.args contains "id_rsa" or
      proc.args contains "id_ed25519" or
      proc.args contains "kube/config"
    )
  output: Someone is searching for a sensitive file (file=%proc.args pcmdline=%proc.pcmdline gparent=%proc.aname[2] evt_type=%evt.type user=%user.name user_uid=%user.uid user_loginuid=%user.loginuid process=%proc.name proc_exepath=%proc.exepath parent=%proc.pname command=%proc.cmdline terminal=%proc.tty)
  priority: INFO
  tags: [sensitive_data, ssh]
```

Write a rule

We wrote the previous rule (search for sensitives files) blindly, by writing it and then testing it. The Falco maintainers instead suggest going through sysdig; let’s see why this method is worthwhile.

sysdig can record system calls to a file and then replay this recording with filters written in the same syntax as Falco conditions, to check whether an event is indeed detected.

Before we begin, we need to know which event we want to react to. Man pages can help here, by telling us which system calls a program uses.
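Besides man pages, you can check empirically which system calls a command makes by running it under strace and keeping only the syscall names. The helper below is a hypothetical sketch: it strips an optional PID prefix (as strace adds when following child processes with -f), keeps the name at the start of each call line, and skips signal and exit lines.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the distinct syscall names found in strace output,
# e.g. a log produced with:  strace -f -o trace.log chmod 777 /tmp/test
# Reads the files given as arguments, or stdin when there are none.
syscall_names() {
  sed -E 's/^(\[pid +[0-9]+\] |[0-9]+ +)//' "$@" |  # drop an optional PID prefix
    grep -oE '^[a-z_][a-z0-9_]*\(' |                 # keep "name(" at line start
    tr -d '(' |
    sort -u
}

# Usage: strace -f -o trace.log chmod 777 /tmp/test && syscall_names trace.log
```

Lines such as "--- SIGCHLD ..." or "+++ exited with 0 +++" do not start with a name followed by a parenthesis, so they are dropped.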

In a first terminal, we will start recording system calls to a file.

I’m going to perform the action I want to monitor (in my case, I want to be warned about a chmod 777). In the chmod man page (man 2 chmod), I see that the system calls involved are chmod, fchmod and fchmodat.

So I’m going to record the fchmodat, fchmod and chmod system calls in a file named dumpfile.scap:

```bash
sysdig -w dumpfile.scap "evt.type in (fchmod,chmod,fchmodat)"
```

In a second terminal, I run the command chmod 777 /tmp/test:

```bash
chmod 777 /tmp/test
```

I stop recording with Ctrl+C. I can then use sysdig to read this file and see which system calls were recorded:

```
$ sysdig -r dumpfile.scap
1546 16:21:59.800433848 1 <NA> (-1.76565) > fchmodat
1547 16:21:59.800449701 1 <NA> (-1.76565) < fchmodat res=0 dirfd=-100(AT_FDCWD) filename=/tmp/test mode=0777(S_IXOTH|S_IWOTH|S_IROTH|S_IXGRP|S_IWGRP|S_IRGRP|S_IXUSR|S_IWUSR|S_IRUSR)
```

Our chmod has indeed been recorded.
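The mode= field shows 0777 decoded into nine S_I* permission flags. As a quick sanity check, the shell sketch below rebuilds that value from the standard octal constants of those flags. It suggests that requiring all nine flags, as the filter below does, amounts to testing (mode & 0777) == 0777, so a mode such as 1777 (sticky bit plus rwx for everyone) should match as well. The has_all_rwx helper is hypothetical.

```bash
#!/usr/bin/env bash
# Standard POSIX permission bits (octal constants from <sys/stat.h>).
S_IRUSR=$((0400)) S_IWUSR=$((0200)) S_IXUSR=$((0100))
S_IRGRP=$((0040)) S_IWGRP=$((0020)) S_IXGRP=$((0010))
S_IROTH=$((0004)) S_IWOTH=$((0002)) S_IXOTH=$((0001))

# OR the nine flags together.
all_nine=$(( S_IRUSR | S_IWUSR | S_IXUSR | S_IRGRP | S_IWGRP | S_IXGRP ))
all_nine=$(( all_nine | S_IROTH | S_IWOTH | S_IXOTH ))
printf 'all nine flags = %o\n' "$all_nine"   # prints: all nine flags = 777

# Hypothetical helper: does an octal mode (e.g. 755, 1777) carry all nine flags?
has_all_rwx() {
  local mode=$(( 8#$1 ))
  (( (mode & all_nine) == all_nine ))
}

has_all_rwx 1777 && echo "1777 matches"
has_all_rwx 755  || echo "755 does not match"
```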

I can now replay this recording while adding conditions, in order to build a Falco rule that reacts to this event. After a few attempts, I end up with the following filter:

```
$ sysdig -r ~/dumpfile.scap "evt.type in (fchmod,chmod,fchmodat) and (evt.arg.mode contains S_IXOTH and evt.arg.mode contains S_IWOTH and evt.arg.mode contains S_IROTH and evt.arg.mode contains S_IXGRP and evt.arg.mode contains S_IWGRP and evt.arg.mode contains S_IRGRP and evt.arg.mode contains S_IXUSR and evt.arg.mode contains S_IWUSR and evt.arg.mode contains S_IRUSR)"
1547 16:21:59.800449701 1 <NA> (-1.76565) < fchmodat res=0 dirfd=-100(AT_FDCWD) filename=/tmp/test mode=0777(S_IXOTH|S_IWOTH|S_IROTH|S_IXGRP|S_IWGRP|S_IRGRP|S_IXUSR|S_IWUSR|S_IRUSR)
```

All that is left is to turn this sysdig filter into a Falco rule.

```yaml
- macro: chmod_777
  condition: (evt.arg.mode contains S_IXOTH and evt.arg.mode contains S_IWOTH and evt.arg.mode contains S_IROTH and evt.arg.mode contains S_IXGRP and evt.arg.mode contains S_IWGRP and evt.arg.mode contains S_IRGRP and evt.arg.mode contains S_IXUSR and evt.arg.mode contains S_IWUSR and evt.arg.mode contains S_IRUSR)

- rule: chmod 777
  desc: Detect if someone is trying to chmod 777 a file
  condition: >
    evt.type in (fchmod,chmod,fchmodat) and chmod_777
  output: Someone is trying to chmod 777 a file (file=%fd.name pcmdline=%proc.pcmdline gparent=%proc.aname[2] evt_type=%evt.type user=%user.name user_uid=%user.uid user_loginuid=%user.loginuid process=%proc.name proc_exepath=%proc.exepath parent=%proc.pname command=%proc.cmdline terminal=%proc.tty)
  priority: NOTICE
  tags: [chmod, security]
```

Override and exceptions

Exceptions are a tricky case to handle. For example, I discover that a specific user needs to search for sensitive files in a maintenance script.

The search for sensitives files rule fires for every find process looking for SSH keys. However, I want this user to be able to do so without triggering an alert.

```yaml
- list: searching_binaries
  items: ['grep', 'fgrep', 'egrep', 'rgrep', 'locate', 'find']

- rule: search for sensitives files
  desc: Detect if someone is searching for a sensitive file
  condition: >
    spawned_process and proc.name in (searching_binaries) and
    (
      proc.args contains "id_rsa" or
      proc.args contains "id_ed25519" or
      proc.args contains "kube/config"
    )
  output: Someone is searching for a sensitive file (file=%proc.args pcmdline=%proc.pcmdline gparent=%proc.aname[2] evt_type=%evt.type user=%user.name user_uid=%user.uid user_loginuid=%user.loginuid process=%proc.name proc_exepath=%proc.exepath parent=%proc.pname command=%proc.cmdline terminal=%proc.tty)
  priority: INFO
  tags: [sensitive_data, ssh]
```

I can do this in two ways:

- Add a patch in the search for sensitive files rule to add an additional condition.

- Add an exception in the search for sensitive files rule to ignore alerts for a specific user.

Why not change the condition to exclude this case?

I have several machines sharing the same Falco rules (we will see below how to synchronize the rules across machines), and I want as few differences between them as possible. So I prefer to add a patch file that only exists on this particular machine.

I then add a file /etc/falco/rules.d/patch-rules.yaml with the following content:

```yaml
- rule: search for sensitives files
  condition: and user.name != "mngmt"
  override:
    condition: append
```

Tip

It is also possible to use override on lists and macros:

```yaml
- list: searching_binaries
  items: ['grep', 'fgrep', 'egrep', 'rgrep', 'locate', 'find']

- list: searching_binaries
  items: ['rg', 'hgrep', 'ugrep']
  override:
    items: append
```

We just used override to append a condition to our rule, but override can also replace a rule’s entire condition. For example:

```yaml
- rule: search for sensitives files
  condition: >
    spawned_process and proc.name in (searching_binaries) and
    (
      proc.args contains "id_rsa" or
      proc.args contains "id_ed25519" or
      proc.args contains "kube/config"
    ) and user.name != "mngmt"
  override:
    condition: replace
```

The downside of this method is that it can become hard to manage when you have many rules and overrides spread across multiple files. It's easy to get lost.
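To make the difference between `append` and `replace` concrete, here is a toy model of what an override does to a rule or list once Falco has loaded both definitions. It is a sketch under stated assumptions, not Falco's loader: `apply_override` is a hypothetical helper, and joining appended condition text with a single space is my assumption.

```python
from typing import Any, Dict


def apply_override(base: Dict[str, Any], patch: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of `base` with the keys listed under patch["override"] applied.

    Toy model only: `append` extends the existing value, `replace` swaps it.
    """
    result = dict(base)
    for key, mode in patch.get("override", {}).items():
        if mode == "append":
            if isinstance(base[key], list):
                # Lists: the new items go after the existing ones.
                result[key] = base[key] + list(patch[key])
            else:
                # Conditions: the new text is glued after the old one (assumed: one space).
                result[key] = f"{base[key]} {patch[key]}"
        elif mode == "replace":
            result[key] = patch[key]
        else:
            raise ValueError(f"unknown override mode: {mode!r}")
    return result


rule = {
    "rule": "search for sensitives files",
    "condition": "spawned_process and proc.name in (searching_binaries)",
}
appended = apply_override(rule, {
    "condition": 'and user.name != "mngmt"',
    "override": {"condition": "append"},
})
print(appended["condition"])
# spawned_process and proc.name in (searching_binaries) and user.name != "mngmt"

binaries = {"list": "searching_binaries", "items": ["grep", "find"]}
print(apply_override(binaries, {"items": ["rg"], "override": {"items": "append"}})["items"])
# ['grep', 'find', 'rg']
```

Because `append` pastes text after the existing condition, the patch has to start with an operator such as `and`. If the base condition had a top-level `or`, the appended `and` could end up binding only to its last branch, so keeping base conditions parenthesized is a safe habit.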

Rather than adding an override that modifies a condition to exclude a context, you can use exceptions.

```yaml
- rule: search for sensitives files
  desc: Detect if someone is searching for a sensitive file
  condition: >
    spawned_process and proc.name in (searching_binaries) and
    (
      proc.args contains "id_rsa" or
      proc.args contains "id_ed25519" or
      proc.args contains "kube/config"
    )
  exceptions:
    - name: ssh_script
      fields: user.name
      values: [mngmt]
  output: Someone is searching for a sensitive file (file=%proc.args pcmdline=%proc.pcmdline gparent=%proc.aname[2] evt_type=%evt.type user=%user.name user_uid=%user.uid user_loginuid=%user.loginuid process=%proc.name proc_exepath=%proc.exepath parent=%proc.pname command=%proc.cmdline terminal=%proc.tty container_id=%container.id container_image=%container.image.repository container_image_tag=%container.image.tag container_name=%container.name)
  priority: INFO
  tags: [sensitive_data, ssh]
```

This syntax is cleaner and lets me add many exceptions without modifying the main rule.

I can also refine my exception by matching on several fields for more precision.

```yaml
- rule: search for sensitives files
  desc: Detect if someone is searching for a sensitive file
  condition: >
    spawned_process and proc.name in (searching_binaries) and
    (
      proc.args contains "id_rsa" or
      proc.args contains "id_ed25519" or
      proc.args contains "kube/config"
    )
  exceptions:
    - name: context_1
      fields: [user.name, proc.cwd, user.shell]
      values:
        - [mngmt, /home/mngmt/, /bin/sh]
  output: Someone is currently searching for a sensitive file (%proc.cwd file=%proc.args pcmdline=%proc.pcmdline gparent=%proc.aname[2] evt_type=%evt.type user=%user.name user_uid=%user.uid user_loginuid=%user.loginuid process=%proc.name proc_exepath=%proc.exepath parent=%proc.pname command=%proc.cmdline terminal=%proc.tty container_id=%container.id container_image=%container.image.repository container_image_tag=%container.image.tag container_name=%container.name)
  priority: INFO
  tags: [sensitive_data, ssh]
```

Using exceptions keeps the `condition` field from becoming too long and too hard to maintain.
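Conceptually, an exception behaves like an extra `and not (...)` clause on the rule: the alert is suppressed when the event matches at least one exception entry. The sketch below models that matching for both forms used above (a single field with a flat list of values, and a list of fields with one tuple of values per row). `is_excepted` is a hypothetical helper, and only the default equality comparison is modeled, not Falco's other `comps` operators.

```python
from typing import Any, Mapping, Sequence


def is_excepted(event: Mapping[str, Any], exceptions: Sequence[Mapping[str, Any]]) -> bool:
    """Return True if `event` matches at least one exception (toy model)."""
    for exc in exceptions:
        fields = exc["fields"]
        values = exc.get("values", [])
        if isinstance(fields, str):
            # Single field: `values` is a flat list, matched with "in".
            if event.get(fields) in values:
                return True
            continue
        for row in values:
            if len(row) != len(fields):
                raise ValueError(f"{exc['name']}: {len(fields)} fields but row {row!r}")
            # Tuple form: every field must equal the value at the same position.
            if all(event.get(field) == value for field, value in zip(fields, row)):
                return True
    return False


ssh_script = [{"name": "ssh_script", "fields": "user.name", "values": ["mngmt"]}]
context_1 = [{
    "name": "context_1",
    "fields": ["user.name", "proc.cwd", "user.shell"],
    "values": [["mngmt", "/home/mngmt/", "/bin/sh"]],
}]

event = {"user.name": "mngmt", "proc.cwd": "/tmp/", "user.shell": "/bin/sh"}
print(is_excepted(event, ssh_script))  # True: the user alone is enough
print(is_excepted(event, context_1))   # False: proc.cwd does not match
```

The multi-field form is stricter: the same user running the same search from another directory still raises an alert.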

I used a fairly simple case for the example, but letting a user search for sensitive files is extremely dangerous. I encourage you to rethink how access to these files is managed rather than creating an exception in Falco.

Falco and the containers

Falco integrates very well with containers and is able to detect events that occur inside them.

This way, we can monitor started containers using the fields of the `container` class (e.g., `container.id`, `container.name`, `container.image`).

```yaml
- macro: container_started
  condition: ((evt.type = container) or (spawned_process and proc.vpid=1))

- rule: New container with tag latest
  desc: Detect if a new container with the tag "latest" is started
  condition: >
    container_started and container.image.tag="latest"
  output: A new container with the tag "latest" is started (container_id=%container.id container_image=%container.image.repository container_image_tag=%container.image.tag container_name=%container.name k8s_ns=%k8s.ns.name k8s_pod_name=%k8s.pod.name)
  priority: INFO
  tags: [container, invalid_tag]
```

Existing rules already work inside containers (shell detection rules, sensitive file search rules, etc.). However, you need to add the `container.id`, `container.image`, and `container.name` fields to the output to get information about the container involved when an alert is triggered.

Small clarification: `container_started` restricts the rule to specific event types. Without it, the rule would not be tied to any particular system call, so Falco would have to evaluate it against every system call to check whether it comes from a container with the `latest` tag, which causes major performance problems, as explained in the Falco rules chapter.
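The performance point can be pictured with a simplified model: Falco groups rules by the event types their condition can match, so a rule is only evaluated for those events, while a rule with no `evt.type` restriction lands in a bucket checked for every event (Falco also warns about such rules when loading them). `RuleIndex` and the second rule name are hypothetical; in the default rules, `spawned_process` restricts events to `execve`/`execveat`.

```python
from collections import defaultdict
from typing import Dict, Iterable, List


class RuleIndex:
    """Simplified model: rules bucketed by the event types their condition can match."""

    def __init__(self) -> None:
        self.by_type: Dict[str, List[str]] = defaultdict(list)
        self.unfiltered: List[str] = []  # rules with no evt.type restriction

    def add(self, rule: str, evt_types: Iterable[str] = ()) -> None:
        types = list(evt_types)
        if not types:
            self.unfiltered.append(rule)
        for evt_type in types:
            self.by_type[evt_type].append(rule)

    def candidates(self, evt_type: str) -> List[str]:
        # Rules that must be evaluated for one incoming event.
        return self.by_type.get(evt_type, []) + self.unfiltered


index = RuleIndex()
# container_started -> evt.type = container, or spawned_process (execve/execveat)
index.add("New container with tag latest", ["container", "execve", "execveat"])
index.add("latest tag without container_started")  # no evt.type filter

stream = ["openat", "read", "execve", "close", "openat"]
evaluations = sum(len(index.candidates(t)) for t in stream)
print(evaluations)  # 6: the unfiltered rule is checked for every single event
```

With only the filtered rule, the same stream would cost a single evaluation (the `execve` event); on a real host, where `openat` and `read` dominate, the gap is far larger.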

For example, let's reuse the `search for sensitives files` rule and add the `container.id`, `container.image`, and `container.name` fields to its output.

```yaml
- rule: search for sensitives files
  desc: Detect if someone is searching for a sensitive file
  condition: >
    spawned_process and proc.name in (searching_binaries) and
    (
      proc.args contains "id_rsa" or
      proc.args contains "id_ed25519" or
      proc.args contains "kube/config"
    )
  output: Someone is searching for a sensitive file (file=%proc.args pcmdline=%proc.pcmdline gparent=%proc.aname[2] evt_type=%evt.type user=%user.name user_uid=%user.uid user_loginuid=%user.loginuid process=%proc.name proc_exepath=%proc.exepath parent=%proc.pname command=%proc.cmdline terminal=%proc.tty container_id=%container.id container_image=%container.image.repository container_image_tag=%container.image.tag container_name=%container.name)
  priority: INFO
  tags: [sensitive_data, ssh]
```

When an alert is triggered, we now get information about the affected container (ID, name, image, etc.):

```json
{
  "hostname": "falco-linux",
  "output": "22:50:25.195959798: Informational Someone is searching for a sensitive file (file=-r id_rsa pcmdline=ash gparent=containerd-shim evt_type=execve user=root user_uid=0 user_loginuid=-1 process=grep proc_exepath=/bin/busybox parent=ash command=grep -r id_rsa terminal=34816 container_id=b578c3492ecf container_image=alpine container_image_tag=latest container_name=sharp_lovelace)",
  "priority": "Informational",
  "rule": "search for sensitives files",
  "source": "syscall",
  "tags": [
    "sensitive_data",
    "ssh"
  ],
  "time": "2024-04-06T20:50:25.195959798Z",
  "output_fields": {
    "container.id": "b578c3492ecf",
    "container.image.repository": "alpine",
    "container.image.tag": "latest",
    "container.name": "sharp_lovelace",
    "evt.time": 1712436625195959800,
    "evt.type": "execve",
    "proc.aname[2]": "containerd-shim",
    "proc.args": "-r id_rsa",
    "proc.cmdline": "grep -r id_rsa",
    "proc.exepath": "/bin/busybox",
    "proc.name": "grep",
    "proc.pcmdline": "ash",
    "proc.pname": "ash",
    "proc.tty": 34816,
    "user.loginuid": -1,
    "user.name": "root",
    "user.uid": 0
  }
}
```

To control whether a rule applies to containers, you can add one of the following conditions:

- `container.id=host` for rules that should not apply to containers.
- `container.id!=host` for rules that must apply only to containers.

Without either condition, a rule applies to all processes, whether or not they run in a container.
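The same `container.id` distinction is handy when post-processing alerts. Below is a minimal sketch that consumes one alert in the JSON format shown above (one JSON object per line, as Falco prints it when `json_output` is enabled) and says where the event happened. The helper names are hypothetical, and it only relies on the `hostname`, `priority`, `rule` and `output_fields` keys visible in the sample alert.

```python
import json
from typing import Any, Dict


def where(fields: Dict[str, Any]) -> str:
    """Describe where an event happened, based on container.id."""
    container_id = fields.get("container.id")
    if container_id is None:
        return "unknown (container.id not in the rule output)"
    if container_id == "host":
        return "host"
    return f"container {container_id} ({fields.get('container.name', '?')})"


def describe_alert(line: str) -> str:
    alert = json.loads(line)
    fields = alert.get("output_fields", {})
    return f"[{alert['priority']}] {alert['rule']} on {alert['hostname']}: {where(fields)}"


sample = json.dumps({
    "hostname": "falco-linux",
    "priority": "Informational",
    "rule": "search for sensitives files",
    "output_fields": {"container.id": "b578c3492ecf", "container.name": "sharp_lovelace"},
})
print(describe_alert(sample))
# [Informational] search for sensitives files on falco-linux: container b578c3492ecf (sharp_lovelace)
```

The "unknown" branch is a reminder that the field only shows up if the rule's `output` references it, which is why adding the container fields to outputs matters.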

xz flaw (CVE-2024-3094)

Let's talk news! A backdoor has been discovered in the `liblzma` library (xz versions 5.6.0 and 5.6.1) that allows an attacker holding a specific private key to bypass SSHD authentication. It is referenced as CVE-2024-3094 and is rated critical.

Sysdig has published a rule that detects whether SSHD loads the faulty `liblzma` library. Here it is:

```yaml
- rule: Backdoored library loaded into SSHD (CVE-2024-3094)
  desc: A version of the liblzma library was seen loading which was backdoored by a malicious user in order to bypass SSHD authentication.
  condition: open_read and proc.name=sshd and (fd.name endswith "liblzma.so.5.6.0" or fd.name endswith "liblzma.so.5.6.1")
  output: SSHD Loaded a vulnerable library (| file=%fd.name | proc.pname=%proc.pname gparent=%proc.aname[2] ggparent=%proc.aname[3] gggparent=%proc.aname[4] image=%container.image.repository | proc.cmdline=%proc.cmdline | container.name=%container.name | proc.cwd=%proc.cwd proc.pcmdline=%proc.pcmdline user.name=%user.name user.loginuid=%user.loginuid user.uid=%user.uid user.loginname=%user.loginname image=%container.image.repository | container.id=%container.id | container_name=%container.name| proc.cwd=%proc.cwd )
  priority: WARNING
  tags: [host,container]
```

If I place this famous `liblzma` library (version 5.6.0) in `/lib/x86_64-linux-gnu/` and start the `sshd` service with it, Falco should raise the alert…

```json
{
  "hostname": "falco-linux",
  "output": "23:11:24.780959791: Warning SSHD Loaded a vulnerable library (| file=/lib/x86_64-linux-gnu/liblzma.so.5.6.0 | proc.pname=systemd gparent=<NA> ggparent=<NA> gggparent=<NA> image=<NA> | proc.cmdline=sshd -D | container.name=host | proc.cwd=/ proc.pcmdline=systemd install user.name=root user.loginuid=-1 user.uid=0 user.loginname=<NA> image=<NA> | container.id=host | container_name=host| proc.cwd=/ )",
  "priority": "Warning",
  "rule": "Backdoored library loaded into SSHD (CVE-2024-3094)",
  "source": "syscall",
  "tags": [
    "container",
    "host"
  ],
  "time": "2024-04-06T21:11:24.780959791Z",
  "output_fields": {
    "container.id": "host",
    "container.image.repository": null,
    "container.name": "host",
    "evt.time": 1712437884780959700,
    "fd.name": "/lib/x86_64-linux-gnu/liblzma.so.5.6.0",
    "proc.aname[2]": null,
    "proc.aname[3]": null,
    "proc.aname[4]": null,
    "proc.cmdline": "sshd -D",
    "proc.cwd": "/",
    "proc.pcmdline": "systemd install",
    "proc.pname": "systemd",
    "user.loginname": "<NA>",
    "user.loginuid": -1,
    "user.name": "root",
    "user.uid": 0
  }
}
```

Bingo! Falco detected it, and we can react accordingly to fix this flaw.

The driver-loader on Falco

When I first installed Falco, I noticed that it needed to load a "driver". A driver is required if you use the `kmod` (kernel module) or the classic `ebpf` mode; the `modern_ebpf` mode does not need one.

If, however, you use a mode that requires a driver, you will see in the pod logs (in a Kubernetes context) that the driver is downloaded and installed into an `emptyDir` volume mounted in the Falco pod.

```shell
$ kubectl logs -l app.kubernetes.io/name=falco -n falco -c falco-driver-loader
2024-04-17 21:49:01 INFO Removing eBPF probe symlink
  └ path: /root/.falco/falco-bpf.o
2024-04-17 21:49:01 INFO Trying to download a driver.
  └ url: https://download.falco.org/driver/7.0.0%2Bdriver/x86_64/falco_debian_6.1.76-1-cloud-amd64_1.o
2024-04-17 21:49:01 INFO Driver downloaded.
  └ path: /root/.falco/7.0.0+driver/x86_64/falco_debian_6.1.76-1-cloud-amd64_1.o
2024-04-17 21:49:01 INFO Symlinking eBPF probe
  ├ src: /root/.falco/7.0.0+driver/x86_64/falco_debian_6.1.76-1-cloud-amd64_1.o
  └ dest: /root/.falco/falco-bpf.o
2024-04-17 21:49:01 INFO eBPF probe symlinked
```

Outside Kubernetes, if we start Falco without a driver, we get an error:

```shell
$ falco -o engine.kind=ebpf
Fri Apr 19 08:20:07 2024: Falco version: 0.37.1 (x86_64)
Fri Apr 19 08:20:07 2024: Loading rules from file /etc/falco/falco_rules.yaml
Fri Apr 19 08:20:07 2024: Loading rules from file /etc/falco/falco_rules.local.yaml
Fri Apr 19 08:20:07 2024: Loaded event sources: syscall
Fri Apr 19 08:20:07 2024: Enabled event sources: syscall
Fri Apr 19 08:20:07 2024: Opening 'syscall' source with BPF probe. BPF probe path: /root/.falco/falco-bpf.o
Fri Apr 19 08:20:07 2024: An error occurred in an event source, forcing termination...
Events detected: 0
Rule counts by severity:
Triggered rules by rule name:
Error: can't open BPF probe '/root/.falco/falco-bpf.o'
```

On Kubernetes, an initContainer is automatically started to download the driver. Outside a cluster, you must download the driver yourself with the command `falcoctl driver install --type ebpf`.

I'm not a big fan of this setup. What would happen if a hacker tampered with the driver? Or if the download server were unavailable while I'm adding new machines to my infrastructure? Ideally, Falco should run fully air-gapped (without any external connection from the cluster).

It is possible to build a Docker image of Falco with the driver already included, but this can cause maintenance problems (the image has to be rebuilt for each new Falco version, and it won't be compatible with every kernel version).

One solution proposed by Sysdig is to host the Falco drivers on your own web server and download them from there (this can even be a pod behind a Kubernetes Service).

To specify a different download URL, set the `FALCOCTL_DRIVER_REPOS` environment variable on the `falco-driver-loader` init container.

In the Helm chart, this is done with the `driver.loader.initContainer.env` key:
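A self-hosted mirror has to serve the files at the paths the loader requests. Judging from the URL in the `falco-driver-loader` log above, the layout looks like `<repo>/<URL-encoded driver version>/<arch>/<file name>`. This sketch rebuilds that URL; the pattern is inferred from that single log line, the split of the file name into target, kernel release and kernel version is my assumption, `.ko` for `kmod` is an assumption too, and `driver_url` is a hypothetical helper.

```python
from urllib.parse import quote


def driver_url(repo: str, driver_version: str, arch: str, target: str,
               kernel_release: str, kernel_version: str,
               kind: str = "ebpf", name: str = "falco") -> str:
    """Build a driver URL following the layout seen in the falco-driver-loader log."""
    extension = {"ebpf": "o", "kmod": "ko"}[kind]  # .ko for kmod is an assumption
    filename = f"{name}_{target}_{kernel_release}_{kernel_version}.{extension}"
    # "7.0.0+driver" becomes "7.0.0%2Bdriver" in the URL path.
    return f"{repo.rstrip('/')}/{quote(driver_version, safe='')}/{arch}/{filename}"


print(driver_url("https://download.falco.org/driver", "7.0.0+driver",
                 "x86_64", "debian", "6.1.76-1-cloud-amd64", "1"))
# https://download.falco.org/driver/7.0.0%2Bdriver/x86_64/falco_debian_6.1.76-1-cloud-amd64_1.o
```

Swapping the first argument for your own server (for example `http://driver-httpd/`) gives the path at which that server would need to expose the same file; the exact values for your nodes are the ones the loader prints in its logs.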

```yaml
driver:
  kind: ebpf
  loader:
    initContainer:
      env:
        - name: FALCOCTL_DRIVER_REPOS
          value: "http://driver-httpd/"
```

Falco on Kubernetes

I wasn’t going to leave you without showing you how to install Falco on a Kubernetes cluster! 😄

Besides being container-friendly, Falco's rules are ready to use on Kubernetes. In a cluster, we get access to new fields for writing rules (or for use in outputs):

- `k8s.ns.name`: name of the Kubernetes namespace.
- `k8s.pod.name`: name of the Kubernetes pod.
- `k8s.pod.label` / `k8s.pod.labels`: Kubernetes pod labels.
- `k8s.pod.cni.json`: Kubernetes pod CNI information.

Note that since Falco monitors system calls, the Falco rules installed by the Helm chart also apply to the hosts themselves (outside pods).

```shell
helm repo add falcosecurity https://falcosecurity.github.io/charts
helm install falco falcosecurity/falco -n falco -f values.yaml --create-namespace
```

Here is the `values.yaml` file used:

```yaml
tty: true
falcosidekick:
  enabled: true
  webui:
    enabled: true
  # You can add extra Falco-Sidekick settings to route notifications, as below
  # config:
  #   slack:
  #     webhookurl: https://hooks.slack.com/services/XXXXX/XXXXX/XXXXX
  #     channel: "#falco-alerts"
driver:
  kind: modern_ebpf
```

When you want to add a rule, the "simple" method is to put it in the Helm chart's `values.yaml` and update the deployment with `helm upgrade --reuse-values -n falco falco falcosecurity/falco -f falco-rules.yaml`. As soon as the `customRules` field is present, the Helm chart creates a ConfigMap containing the custom rules; you can also use it to modify existing rules.

customRules:

restrict_tag.yaml: |

- macro: container_started

condition: >

((evt.type = container or

(spawned_process and proc.vpid=1)))

- list: namespace_system

items: [kube-system, cilium-system]

- macro: namespace_allowed_to_use_latest_tag

condition: not (k8s.ns.name in (namespace_system))

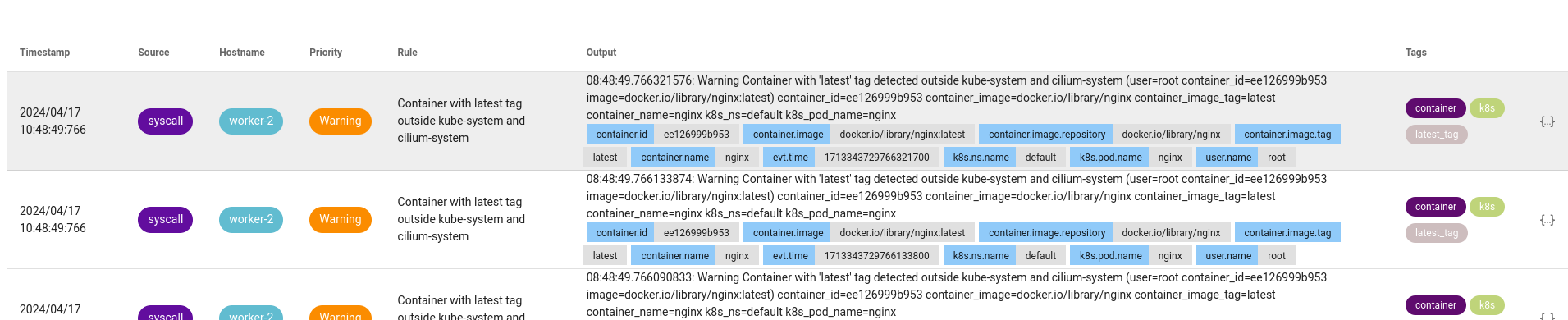

- rule: Container with latest tag outside kube-system and cilium-system

desc: Detects containers with the "latest" tag started in namespaces other than kube-system and cilium-system

condition: >

container_started

and container.image endswith "latest"

and namespace_allowed_to_use_latest_tag

output: "Container with 'latest' tag detected outside kube-system and cilium-system (user=%user.name container_id=%container.id image=%container.image)"

priority: WARNING

tags: [k8s, container, latest_tag]Note: I wrote the rule reacting to a container with the tag latestto show that it was possible to react to pod metadata, but it would be better to leave this function to a Kyverno or an Admission Policy

For those who aren't satisfied with this approach, we will see below how to automate adding rules from external artifacts (without updating our Helm deployment each time).

Trick

Nothing requires a single `values.yaml` file containing both the configuration AND the Falco rules. You can split them for clarity.

```shell
helm install falco falcosecurity/falco -n falco -f values.yaml -f falco-rules.yaml --create-namespace
```

Now, if I start a container with the `nginx:latest` image in the `default` namespace:

```shell
kubectl run nginx --image=nginx:latest -n default
```

Falco detects it and notifies me that the `nginx` container with the `nginx:latest` image was started in the `default` namespace.
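Note that this custom rule uses `endswith` on the full image reference, while the earlier rule compared `container.image.tag` exactly. A plain-Python restatement of both conditions shows the difference. The functions and the `ghcr.io/acme/app` image are hypothetical, and I assume `container.image` holds the image reference including its tag.

```python
SYSTEM_NAMESPACES = {"kube-system", "cilium-system"}  # the namespace_system list


def endswith_rule(image: str, namespace: str) -> bool:
    # Mirrors: container.image endswith "latest" and not k8s.ns.name in (namespace_system)
    return image.endswith("latest") and namespace not in SYSTEM_NAMESPACES


def exact_tag_rule(image_tag: str, namespace: str) -> bool:
    # Stricter variant using container.image.tag="latest", as in the earlier rule
    return image_tag == "latest" and namespace not in SYSTEM_NAMESPACES


print(endswith_rule("docker.io/library/nginx:latest", "default"))      # True
print(endswith_rule("docker.io/library/nginx:latest", "kube-system"))  # False
print(endswith_rule("ghcr.io/acme/app:v2-latest", "default"))          # True (suffix match)
print(exact_tag_rule("v2-latest", "default"))                          # False
```

If tags such as `v2-latest` exist in your registries, the exact tag comparison avoids those false positives.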

Detection, alert and response

For now, the big difference between Falco and its competitors on Kubernetes is the ability to react to an alert raised by a pod: Falco can only detect and alert; it cannot respond (for example, by killing the pod or quarantining it).

For this, there is a tool called Falco Talon, developed by a Falco maintainer.

It is a response tool that works like Falco-Sidekick (the two can even be used together). When it receives an alert, Falco Talon acts to block the attack.

⚠️ Warning: the project is still under development, so what you see may differ from what I show here. ⚠️

Here are the possible actions with Falco Talon:

- Kill the pod (`kubernetes:terminate`)
- Add / remove a label (`kubernetes:labelize`)
- Create a NetworkPolicy (`kubernetes:networkpolicy`)
- Run a command/script in the pod (`kubernetes:exec` and `kubernetes:script`)
- Delete a resource other than a pod (`kubernetes:delete`)
- Block outbound traffic (`calico:networkpolicy`) (requires Calico)

Each action is configurable and nothing prevents you from having the same action several times with different parameters.

To install it, a Helm chart is available in the GitHub repository (a chart index should be published later):

```shell
git clone https://github.com/Falco-Talon/falco-talon.git
cd falco-talon/deployment/helm/
helm install falco-talon . -n falco --create-namespace
```

Once Talon is installed, we need to configure Falco-Sidekick to send alerts to Talon. To do this, we modify our `values.yaml` and add the following configuration:

```yaml
falcosidekick:
  enabled: true
  config:
    webhook:
      address: "http://falco-talon:2803"
```

And there you have it! Falco-Sidekick will send alerts to Falco Talon, which can then react to them. It's up to us to configure how it reacts to our alerts.

The files to modify are in the GitHub repository (in the Helm chart): `./deployment/helm/rules.yaml` and `./deployment/helm/rules_overrides.yaml`.

Here is an excerpt from Talon’s default configuration (in the file rules.yaml):

```yaml
- action: Labelize Pod as Suspicious
  actionner: kubernetes:labelize
  parameters:
    labels:
      suspicious: true

- rule: Terminal shell in container
  match:
    rules:
      - Terminal shell in container
    output_fields:
      - k8s.ns.name!=kube-system, k8s.ns.name!=falco
  actions:
    - action: Labelize Pod as Suspicious
```

This creates the `Labelize Pod as Suspicious` action, which adds a `suspicious: true` label to the pod when a terminal is detected in a container. The action only applies if the `Terminal shell in container` alert is triggered and its `k8s.ns.name` field is neither `kube-system` nor `falco`.

Simple, right? 😄
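The matching logic of that Talon rule can be restated in a few lines of Python. This is a model, not Talon's code: the helper names are hypothetical, and treating comma-separated comparisons within one `output_fields` entry as AND and several entries as OR is my reading of Talon's documentation, so treat it as an assumption.

```python
from typing import Any, Dict, List


def _holds(fields: Dict[str, Any], comparison: str) -> bool:
    """Evaluate one `key=value` or `key!=value` comparison against alert fields."""
    comparison = comparison.strip()
    if "!=" in comparison:
        key, value = comparison.split("!=", 1)
        return str(fields.get(key.strip())) != value.strip()
    key, value = comparison.split("=", 1)
    return str(fields.get(key.strip())) == value.strip()


def talon_matches(alert: Dict[str, Any], rules: List[str], output_fields: List[str]) -> bool:
    if alert.get("rule") not in rules:
        return False
    if not output_fields:
        return True
    fields = alert.get("output_fields", {})
    # Assumed semantics: AND inside one entry (comma-separated), OR across entries.
    return any(all(_holds(fields, c) for c in entry.split(",")) for entry in output_fields)


match_rules = ["Terminal shell in container"]
match_fields = ["k8s.ns.name!=kube-system, k8s.ns.name!=falco"]

alert = {"rule": "Terminal shell in container", "output_fields": {"k8s.ns.name": "default"}}
print(talon_matches(alert, match_rules, match_fields))  # True
```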

To test this, let’s start an nginx pod:

```shell
$ kubectl run nginx --image=nginx:latest -n default
pod/nginx created
$ kubectl get pods nginx --show-labels
NAME    READY   STATUS    RESTARTS   AGE   LABELS
nginx   1/1     Running   0          10s   run=nginx
```

No `suspicious` label is present. But if I start a terminal in the `nginx` pod…

```shell
$ kubectl exec pod/nginx -it -- bash
root@nginx:/# id
uid=0(root) gid=0(root) groups=0(root)
root@nginx:/# exit
$ kubectl get pods --show-labels
NAME    READY   STATUS    RESTARTS   AGE   LABELS
nginx   1/1     Running   0          16m   run=nginx,suspicious=true
```

A `suspicious` label has been added to the `nginx` pod! 😄

We can also remove a label so that a Service no longer routes traffic to that pod.

Another concern is that as long as the pod is running, the attacker can continue their attack: download payloads, reach other services, etc. One solution is to add a NetworkPolicy that blocks outgoing traffic from the pod.

Here is my new rules file:

```yaml
- action: Disable outbound connections
  actionner: kubernetes:networkpolicy
  parameters:
    allow:
      - 127.0.0.1/32

- action: Labelize Pod as Suspicious
  actionner: kubernetes:labelize
  parameters:
    labels:
      app: ""
      suspicious: true

- rule: Terminal shell in container
  match:
    rules:
      - Terminal shell in container
    output_fields:
      - k8s.ns.name!=kube-system, k8s.ns.name!=falco
  actions:
    - action: Disable outbound connections
    - action: Labelize Pod as Suspicious
```

What do you think will happen if I launch a terminal in a pod?

Let's find out right away by creating a `netshoot` pod (an image used for network testing) through a Deployment.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: netshoot
spec:
  replicas: 1
  selector:
    matchLabels:
      app: netshoot
  template:
    metadata:
      labels:
        app: netshoot
    spec:
      containers:
        - name: netshoot
          image: nicolaka/netshoot
          command: ["/bin/bash"]
          args: ["-c", "while true; do sleep 60;done"]
```

By default (without any NetworkPolicy), the `netshoot` pod can access the internet and other services in the cluster. But once I apply my rule and start a terminal in the `netshoot` pod, outbound traffic is blocked, the `suspicious` label is added to the pod, and the `app` label is removed.

Since the `app=netshoot` label is removed, the pod no longer matches the Deployment's selector, so the Deployment (through its ReplicaSet) creates a new pod to maintain the desired number of replicas.
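The label trick relies on how `matchLabels` selectors work: a pod belongs to a selector only if it carries every key/value pair of that selector. This sketch replays the situation with the labels from the output below (the ReplicaSet selector is assumed to be `app=netshoot` plus its `pod-template-hash`). The helper names are hypothetical; label values are strings in Kubernetes, hence `"true"`.

```python
from typing import Dict, List

Labels = Dict[str, str]


def selector_matches(labels: Labels, match_labels: Labels) -> bool:
    # matchLabels: every key/value pair of the selector must be present on the pod.
    return all(labels.get(key) == value for key, value in match_labels.items())


def owned_pods(pods: Dict[str, Labels], match_labels: Labels) -> List[str]:
    return sorted(name for name, labels in pods.items() if selector_matches(labels, match_labels))


replicaset_selector = {"app": "netshoot", "pod-template-hash": "789557564b"}
quarantined = {"netshoot-789557564b-8gc7m": {"pod-template-hash": "789557564b", "suspicious": "true"}}

print(owned_pods(quarantined, replicaset_selector))     # []: 0 of 1 replicas, so a new pod is created
print(owned_pods(quarantined, {"suspicious": "true"}))  # ['netshoot-789557564b-8gc7m']
```

The quarantined pod keeps running but is released by the ReplicaSet, while a `suspicious=true` selector still targets it.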

```shell
$ kubectl get pods --show-labels
NAME                        READY   STATUS    RESTARTS   AGE   LABELS
netshoot-789557564b-8gc7m   1/1     Running   0          13m   app=netshoot,pod-template-hash=789557564b
$ kubectl exec deploy/netshoot -it -- bash
netshoot-789557564b-8gc7m:~# ping -c1 1.1.1.1
PING 1.1.1.1 (1.1.1.1) 56(84) bytes of data.

--- 1.1.1.1 ping statistics ---
1 packets transmitted, 0 received, 100% packet loss, time 0ms
netshoot-789557564b-8gc7m:~# curl http://kubernetes.default.svc.cluster.local:443 # No response
netshoot-789557564b-8gc7m:~# exit
$ kubectl get pods --show-labels
NAME                        READY   STATUS    RESTARTS   AGE   LABELS
netshoot-789557564b-8gc7m   1/1     Running   0          14m   pod-template-hash=789557564b,suspicious=true
netshoot-789557564b-grg8k   1/1     Running   0          54s   app=netshoot,pod-template-hash=789557564b
```

This way, quarantined pods are easily isolated and all their outgoing traffic is blocked, without impacting the Deployment.
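The `allow` list of the `Disable outbound connections` action reads like a CIDR allow-list for egress. Assuming each entry becomes one of the only permitted egress destinations in the generated NetworkPolicy (which matches the ping and curl results above), this sketch checks which destinations would still be reachable. `10.96.0.1` is a hypothetical API server ClusterIP, and `egress_allowed` is a hypothetical helper.

```python
import ipaddress

# The `allow` list of the "Disable outbound connections" action.
ALLOWED_EGRESS = [ipaddress.ip_network("127.0.0.1/32")]


def egress_allowed(destination: str) -> bool:
    """True if `destination` falls inside one of the allowed CIDR blocks."""
    address = ipaddress.ip_address(destination)
    return any(address in network for network in ALLOWED_EGRESS)


for destination in ["127.0.0.1", "1.1.1.1", "10.96.0.1"]:
    print(destination, egress_allowed(destination))
# 127.0.0.1 True
# 1.1.1.1 False
# 10.96.0.1 False
```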

The NetworkPolicy created by Talon uses the `suspicious=true` label as its selector. However, once we delete the pod, the NetworkPolicy remains, so we have to delete it manually.

Beyond the Falco-Sidekick notification when an alert occurs, Talon can also send a short message describing the action it took.

By default, Talon generates Kubernetes events in the alert's namespace. To see them, run: `kubectl get events --sort-by=.metadata.creationTimestamp`.

```text
24m   Normal   falco-talon:kubernetes:networkpolicy:success   pod   Status: success...
24m   Normal   falco-talon:kubernetes:labelize:success        pod   Status: success...
```

But Talon can also be configured to send messages to a webhook, a Slack channel, an SMTP server, Loki, etc.

In my case, I will configure it to send messages to a webhook by updating Falco Talon's `values.yaml` with the following values:

```yaml
defaultNotifiers:
  - webhook
  - k8sevents
notifiers:
  webhook:
    url: "https://webhook.site/045451d8-ab16-45d9-a65e-7d1858f8c5b7"
    http_method: "POST"
```

I update my Helm release with `helm upgrade --reuse-values -n falco falco-talon . -f values.yaml`. From then on, every time Talon performs an action, I receive a message on my webhook.

For example, when Talon deploys a NetworkPolicy to block outgoing traffic from a pod, I receive a message like this:
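If you'd rather receive these notifications on your own endpoint than on webhook.site, a minimal receiver can be sketched with the Python standard library. The field names (`status`, `action`, `actionner`, `rule`, `objects`) are taken from the sample payload Talon sends; the handler, the `serve` function and port 8080 are hypothetical choices.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Any, Dict


def summarize(payload: Dict[str, Any]) -> str:
    """One-line summary of a Talon notification."""
    objects = ", ".join(f"{kind}={name}" for kind, name in sorted(payload.get("objects", {}).items()))
    return (f"{payload.get('status')}: {payload.get('action')} "
            f"({payload.get('actionner')}) for rule '{payload.get('rule')}' [{objects}]")


class TalonWebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length) or b"{}")
        print(summarize(payload), flush=True)
        self.send_response(204)  # No Content: acknowledge receipt
        self.end_headers()


def serve(port: int = 8080) -> None:
    """Blocking: listen for Talon notifications until interrupted."""
    HTTPServer(("0.0.0.0", port), TalonWebhookHandler).serve_forever()
```

Calling `serve()` on a host reachable from the cluster and pointing `notifiers.webhook.url` at it would print one line per action.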

```json
{
  "objects": {
    "Namespace": "default",
    "Networkpolicy": "netshoot-789557564b-lwgfs",
    "Pod": "netshoot-789557564b-lwgfs"
  },
  "trace_id": "51ac05de-f97c-45c9-9ac7-cb6f93062f8a",
  "rule": "Terminal shell in container",
  "event": "A shell was spawned in a container with an attached terminal (evt_type=execve user=root user_uid=0 user_loginuid=-1 process=bash proc_exepath=/bin/bash parent=containerd-shim command=bash terminal=34816 exe_flags=EXE_WRITABLE container_id=0a1197d327ff container_image=docker.io/nicolaka/netshoot container_image_tag=latest container_name=netshoot k8s_ns=default k8s_pod_name=netshoot-789557564b-lwgfs)",
  "message": "action",
  "output": "the networkpolicy 'netshoot-789557564b-lwgfs' in the namespace 'default' has been updated",
  "actionner": "kubernetes:networkpolicy",
  "action": "Disable outbound connections",
  "status": "success"
}
```

GitOps and Falco

Since my article on ArgoCD, in which I talked about GitOps and X-as-Code, you may have guessed that I'm going to talk about managing Falco rules "as code". And you're right!

Let's see how to manage our Falco configuration in "pull" mode, with Falco fetching it itself.

If I have an infrastructure of ~100 machines, I'm not going to connect to each of them to modify or update the Falco rules one machine at a time.

This is why Falco comes with a tool named `falcoctl` that retrieves Falco content (plugins, rules, etc.) from an external server (it is also the tool used to download drivers). Falco uses OCI artifacts to distribute rules and plugins.

```shell
$ falcoctl artifact list
INDEX          ARTIFACT                TYPE       REGISTRY  REPOSITORY
falcosecurity  application-rules       rulesfile  ghcr.io   falcosecurity/rules/application-rules
falcosecurity  cloudtrail              plugin     ghcr.io   falcosecurity/plugins/plugin/cloudtrail
falcosecurity  cloudtrail-rules        rulesfile  ghcr.io   falcosecurity/plugins/ruleset/cloudtrail
falcosecurity  dummy                   plugin     ghcr.io   falcosecurity/plugins/plugin/dummy
falcosecurity  dummy_c                 plugin     ghcr.io   falcosecurity/plugins/plugin/dummy_c
falcosecurity  falco-incubating-rules  rulesfile  ghcr.io   falcosecurity/rules/falco-incubating-rules
falcosecurity  falco-rules             rulesfile  ghcr.io   falcosecurity/rules/falco-rules
falcosecurity  falco-sandbox-rules     rulesfile  ghcr.io   falcosecurity/rules/falco-sandbox-rules
# ...
```

The Falco package already ships default rules (those from the `falco-rules` artifact); you can add another rule set this way:

```shell
$ falcoctl artifact install falco-incubating-rules
2024-04-17 18:36:11 INFO Resolving dependencies ...
2024-04-17 18:36:12 INFO Installing artifacts refs: [ghcr.io/falcosecurity/rules/falco-incubating-rules:latest]
2024-04-17 18:36:12 INFO Preparing to pull artifact ref: ghcr.io/falcosecurity/rules/falco-incubating-rules:latest
2024-04-17 18:36:12 INFO Pulling layer d306556e1c90
2024-04-17 18:36:13 INFO Pulling layer 93a62ab52683
2024-04-17 18:36:13 INFO Pulling layer 5e734f96181c
2024-04-17 18:36:13 INFO Verifying signature for artifact
  └ digest: ghcr.io/falcosecurity/rules/falco-incubating-rules@sha256:5e734f96181cda9fc34e4cc6a1808030c319610e926ab165857a7829d297c321
2024-04-17 18:36:13 INFO Signature successfully verified!
2024-04-17 18:36:13 INFO Extracting and installing artifact type: rulesfile file: falco-incubating_rules.yaml.tar.gz
2024-04-17 18:36:13 INFO Artifact successfully installed
  ├ name: ghcr.io/falcosecurity/rules/falco-incubating-rules:latest
  ├ type: rulesfile
  ├ digest: sha256:5e734f96181cda9fc34e4cc6a1808030c319610e926ab165857a7829d297c321
  └ directory: /etc/falco
```

As soon as the command runs, `falcoctl` downloads the rules from the OCI image `ghcr.io/falcosecurity/rules/falco-incubating-rules:latest` and installs them in `/etc/falco` (here, the file `/etc/falco/falco-incubating_rules.yaml` is created).

However, for a sustainable infrastructure, I'm not going to type commands every time I want to install new rules. So let's look at the `falcoctl` configuration file (which should have been generated the first time you ran `falcoctl`), located at `/etc/falcoctl/config.yaml`.

```yaml
artifact:
  follow:
    every: 6h0m0s
    falcoversions: http://localhost:8765/versions
    refs:
      - falco-rules:0
driver:
  type: modern_ebpf
  name: falco
  repos:
    - https://download.falco.org/driver
  version: 7.0.0+driver
  hostroot: /
indexes:
  - name: falcosecurity
    url: https://falcosecurity.github.io/falcoctl/index.yaml
```

First, let's focus on the `follow` section. It lets you track Falco rule updates and install them automatically.

To continue the previous example, rather than installing `falco-incubating-rules` as a one-off, we will add it to the `falcoctl` configuration so that it is installed automatically by the `falcoctl artifact follow` command.

To do this, we add `falco-incubating-rules` to the `falcoctl` configuration:

```yaml
artifact:
  follow:
    every: 6h0m0s
    falcoversions: http://localhost:8765/versions
    refs:
      - falco-incubating-rules
# ...
```

Then `falcoctl artifact follow` installs the `falco-incubating-rules` rules and automatically updates them every six hours if a new version is available on the `latest` tag (by comparing the artifact's digest).

```shell
$ falcoctl artifact follow
2024-04-17 18:50:06 INFO Creating follower artifact: falco-incubating-rules:latest check every: 6h0m0s
2024-04-17 18:50:06 INFO Starting follower artifact: ghcr.io/falcosecurity/rules/falco-incubating-rules:latest
2024-04-17 18:50:06 INFO Found new artifact version followerName: ghcr.io/falcosecurity/rules/falco-incubating-rules:latest tag: latest
2024-04-17 18:50:10 INFO Artifact correctly installed
  ├ followerName: ghcr.io/falcosecurity/rules/falco-incubating-rules:latest
  ├ artifactName: ghcr.io/falcosecurity/rules/falco-incubating-rules:latest
  ├ type: rulesfile
  ├ digest: sha256:d4c03e000273a0168ee3d9b3dfb2174e667b93c9bfedf399b298ed70f37d623b
  └ directory: /etc/falco
```

Information

Quick reminder: Falco automatically reloads the rules when a file configured in `/etc/falco/falco.yaml` is modified.

Note that by default, `falcoctl` uses the `latest` tag for OCI images, but I strongly recommend pinning a specific version (e.g., `1.0.0`). To list the available versions of an artifact, use the `falcoctl artifact info` command. For example:
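The follow mechanism boils down to a loop: on every interval, resolve the digest currently behind the followed tag, and reinstall only when it differs from the last one installed. The sketch below models that behavior for a single reference; it is not falcoctl's implementation (which is written in Go), `Follower` is hypothetical, `resolve_digest` and `install` are hypothetical callbacks, and the digests are made-up short values.

```python
import time
from typing import Callable, List, Optional, Tuple


class Follower:
    """Toy model of following one artifact reference (not falcoctl's real code)."""

    def __init__(self, ref: str,
                 resolve_digest: Callable[[str], str],
                 install: Callable[[str, str], None]) -> None:
        self.ref = ref
        self.resolve_digest = resolve_digest  # hypothetical: ask the registry for the tag's digest
        self.install = install                # hypothetical: pull and extract into /etc/falco
        self.current: Optional[str] = None

    def check_once(self) -> bool:
        """Install the artifact if the digest behind the tag changed; return True if so."""
        digest = self.resolve_digest(self.ref)
        if digest == self.current:
            return False
        self.install(self.ref, digest)
        self.current = digest
        return True

    def run_forever(self, every_seconds: float) -> None:
        while True:
            self.check_once()
            time.sleep(every_seconds)


registry = {"falco-incubating-rules:latest": "sha256:5e73"}
installed: List[Tuple[str, str]] = []
follower = Follower("falco-incubating-rules:latest", registry.__getitem__,
                    lambda ref, digest: installed.append((ref, digest)))

print(follower.check_once())  # True: first install
print(follower.check_once())  # False: same digest, nothing to do
registry["falco-incubating-rules:latest"] = "sha256:d4c0"  # a new release is pushed
print(follower.check_once())  # True: digest changed, reinstall
```

This also shows why the choice of tag matters: behind a fixed version tag such as `1.0.0` the digest normally never changes, so following only performs the initial install, while a floating tag such as `3` keeps picking up new 3.x releases.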

```shell
$ falcoctl artifact info falco-incubating-rules
REF                                                 TAGS
ghcr.io/falcosecurity/rules/falco-incubating-rules  2.0.0-rc1, sha256-8b8dd8ee8eec6b0ba23b6a7bc3926a48aaa8e56dc42837a0ad067988fdb19e16.sig, 2.0.0, 2.0, 2, latest, sha256-1391a1df4aa230239cff3efc7e0754dbf6ebfa905bef5acadf8cdfc154fc1557.sig, 3.0.0-rc1, sha256-de9eb3f8525675dc0ffd679955635aa1a8f19f4dea6c9a5d98ceeafb7a665170.sig, 3.0.0, 3.0, 3, sha256-555347ba5f7043f0ca21a5a752581fb45050a706cd0fb45aabef82375591bc87.sig, 3.0.1, sha256-5e734f96181cda9fc34e4cc6a1808030c319610e926ab165857a7829d297c321.sig
```

Create your own artifacts

We have seen how to use the artifacts offered by the `falcosecurity` index through `falcoctl`; now it's up to us to create our own!

I will start by creating a file `never-chmod-777.yaml` containing a Falco rule:

```yaml
- macro: chmod_777
  condition: (evt.arg.mode contains S_IXOTH and evt.arg.mode contains S_IWOTH and evt.arg.mode contains S_IROTH and evt.arg.mode contains S_IXGRP and evt.arg.mode contains S_IWGRP and evt.arg.mode contains S_IRGRP and evt.arg.mode contains S_IXUSR and evt.arg.mode contains S_IWUSR and evt.arg.mode contains S_IRUSR)

- rule: chmod 777
  desc: Detect if someone is trying to chmod 777 a file
  condition: >
    evt.type=fchmodat and chmod_777
  output: Someone is trying to chmod 777 a file (file=%fd.name pcmdline=%proc.pcmdline gparent=%proc.aname[2] evt_type=%evt.type user=%user.name user_uid=%user.uid user_loginuid=%user.loginuid process=%proc.name proc_exepath=%proc.exepath parent=%proc.pname command=%proc.cmdline terminal=%proc.tty)
  priority: ERROR
  tags: [chmod, security]
```

To package this file into an OCI artifact, we start by authenticating to a container registry (here, GitHub Container Registry):

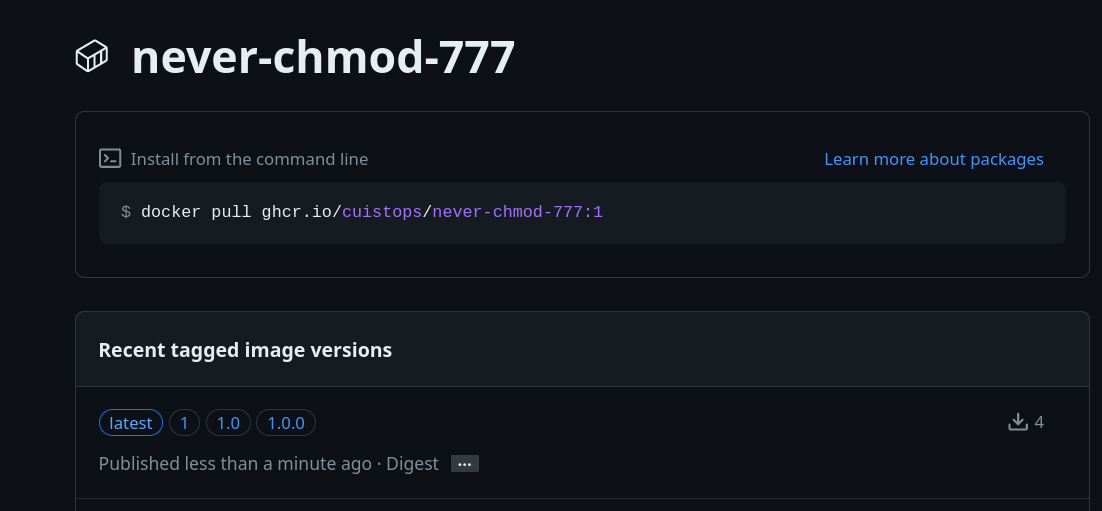

```shell
docker login ghcr.io
```

No `docker build` is needed to create our image; `falcoctl` can do it directly:

```shell
$ falcoctl registry push --type rulesfile \
    --version 1.0.0 \
    ghcr.io/cuistops/never-chmod-777:1.0.0 \
    never-chmod-777.yaml
2024-04-17 19:03:10 INFO Preparing to push artifact name: ghcr.io/cuistops/never-chmod-777:1.0.0 type: rulesfile
2024-04-17 19:03:10 INFO Pushing layer ac9ec4319805
2024-04-17 19:03:11 INFO Pushing layer a449a3b9a393
2024-04-17 19:03:12 INFO Pushing layer 9fa17441da69
2024-04-17 19:03:12 INFO Artifact pushed
  ├ name: ghcr.io/cuistops/never-chmod-777:1.0.0
  ├ type:
  └ digest: sha256:9fa17441da69ec590f3d9c0a58c957646d55060ffa2deae84d99b513a5041e6d
```

There you have it! We now have the OCI image `ghcr.io/cuistops/never-chmod-777:1.0.0` containing our Falco rule. All that remains is to add it to the `falcoctl` configuration:

```yaml
artifact:
  follow:
    every: 6h0m0s
    falcoversions: http://localhost:8765/versions
    refs:
      - falco-rules:3
      - ghcr.io/cuistops/never-chmod-777:1.0.0
```

`falcoctl artifact follow` then automatically creates the file `/etc/falco/never-chmod-777.yaml` containing my rule.

```shell
$ falcoctl artifact follow
2024-04-17 19:07:29 INFO Creating follower artifact: falco-rules:3 check every: 6h0m0s
2024-04-17 19:07:29 INFO Creating follower artifact: ghcr.io/cuistops/never-chmod-777:1.0.0 check every: 6h0m0s
2024-04-17 19:07:29 INFO Starting follower artifact: ghcr.io/falcosecurity/rules/falco-rules:3
2024-04-17 19:07:29 INFO Starting follower artifact: ghcr.io/cuistops/never-chmod-777:1.0.0
2024-04-17 19:07:30 INFO Found new artifact version followerName: ghcr.io/falcosecurity/rules/falco-rules:3 tag: latest
2024-04-17 19:07:30 INFO Found new artifact version followerName: ghcr.io/cuistops/never-chmod-777:1.0.0 tag: 1.0.0
2024-04-17 19:07:33 INFO Artifact correctly installed
                         ├ followerName: ghcr.io/cuistops/never-chmod-777:1.0.0
                         ├ artifactName: ghcr.io/cuistops/never-chmod-777:1.0.0
                         ├ type: rulesfile
                         ├ digest: sha256:9fa17441da69ec590f3d9c0a58c957646d55060ffa2deae84d99b513a5041e6d
                         └ directory: /etc/falco
2024-04-17 19:07:33 INFO Artifact correctly installed
                         ├ followerName: ghcr.io/falcosecurity/rules/falco-rules:3
                         ├ artifactName: ghcr.io/falcosecurity/rules/falco-rules:3
                         ├ type: rulesfile
                         ├ digest: sha256:d4c03e000273a0168ee3d9b3dfb2174e667b93c9bfedf399b298ed70f37d623b
                         └ directory: /etc/falco
```

In a Kubernetes cluster, you specify the artifacts to follow in the Helm chart's values.yaml, under falcoctl.config.artifact.follow.refs:

```yaml
falcoctl:
  config:
    artifact:
      follow:
        refs:
          - falco-rules:3
          - ghcr.io/cuistops/never-chmod-777:1.0.0
```

When you push an artifact with `falcoctl registry push`, falcoctl keeps the path you passed as an argument. At install time, the file is dropped at that same path relative to /etc/falco. For example, if I pass ./rules.d/never-chmod-777.yaml when generating my artifact, the file will be dropped in /etc/falco/rules.d/never-chmod-777.yaml. You therefore need to make sure that this directory exists and/or that Falco is configured to read rules from this location.

As a reminder, Falco automatically loads (and reloads) rules from the following locations:

- /etc/falco/falco_rules.yaml
- /etc/falco/rules.d/*
- /etc/falco/falco_rules.local.yaml

If I drop a rules file at /etc/falco/never-chmod-777.yaml, Falco will not read it (by default). A viable solution is to drop the rules in the /etc/falco/rules.d folder, which is already part of Falco's configuration. However, this folder is not created by default.
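To check where a rules file will end up before publishing it, the two behaviors described above can be modeled in a few lines of shell:

- falcoctl keeps the pushed relative path under its install directory.
- Falco's default configuration only reads the three locations listed.

This is a minimal sketch under those assumptions. `install_path` and `loaded_by_default` are hypothetical helpers written for this example, not falcoctl commands. They only encode the default rules locations, not a customized falco.yaml.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of falcoctl) modeling the behavior described above.

# install_path DIR PUSHED_PATH
# Assumption: falcoctl drops the file at PUSHED_PATH relative to DIR (default /etc/falco).
install_path() {
  local dir="${1%/}" rel="${2#./}"   # strip a trailing "/" from DIR and a leading "./" from the path
  printf '%s/%s\n' "$dir" "$rel"
}

# loaded_by_default PATH
# Succeeds if PATH is one of the rules locations read by Falco's default configuration.
loaded_by_default() {
  case "$1" in
    /etc/falco/falco_rules.yaml | /etc/falco/falco_rules.local.yaml) return 0 ;;
    /etc/falco/rules.d/*) return 0 ;;
    *) return 1 ;;
  esac
}

for pushed in never-chmod-777.yaml ./rules.d/never-chmod-777.yaml; do
  dest=$(install_path /etc/falco "$pushed")
  if loaded_by_default "$dest"; then
    echo "$dest: loaded by default"
  else
    echo "$dest: NOT loaded by default"
  fi
done
```

With the two example paths, only the file pushed as ./rules.d/never-chmod-777.yaml lands in a location Falco reads without any configuration change.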

On Kubernetes, I added an initContainer that creates the rules.d directory in the rulesfiles-install-dir volume, and I tell falcoctl to drop the rules in this directory. The rulesfiles-install-dir volume is mounted at /etc/falco in the Falco container and at /rulesfiles in the falcoctl containers, which is why two different paths appear below.

```yaml
extra:
  initContainers:
    - name: create-rulesd-dir
      image: busybox
      command: ["mkdir", "-p", "/etc/falco/rules.d"]
      volumeMounts:
        - name: rulesfiles-install-dir
          mountPath: /etc/falco

falcoctl:
  artifact:
    install:
      args: ["--log-format=json", "--rulesfiles-dir", "/rulesfiles/rules.d/"]
    follow:
      args: ["--log-format=json", "--rulesfiles-dir", "/rulesfiles/rules.d/"]
  config:
    artifact:
      follow:
        refs:
          - falco-rules:3
          - ghcr.io/cuistops/never-chmod-777:1.0.0
```

With this setup, my artifacts are installed directly in a folder monitored by Falco. I do not need to modify Falco's configuration to declare the new rules files.
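Once the rules file is installed, you can exercise the rule from a shell on a monitored host. The sketch below assumes GNU coreutils (for `stat -c`). It creates a throwaway file and applies both forms mentioned later in the index description, `chmod 777` and `chmod a+rwx`. Both should produce mode 777, which sets all nine permission bits tested by the `chmod_777` macro.

Whether an alert actually fires also assumes that chmod issues an fchmodat system call, since that is the only event type the rule matches. This may vary with the coreutils/glibc version. The alerts then show up wherever Falco's output is configured.

```shell
#!/usr/bin/env bash
# Exercise the "chmod 777" rule on a throwaway file.
# Assumptions: GNU coreutils (stat -c), and chmod going through fchmodat as the rule expects.
set -eu

target=$(mktemp)              # arbitrary temporary file, created with mode 600
trap 'rm -f "$target"' EXIT   # clean up on exit

chmod 777 "$target"           # numeric form
mode_numeric=$(stat -c '%a' "$target")

chmod 600 "$target"           # reset so the next call really changes the mode
chmod a+rwx "$target"         # symbolic form, same resulting mode
mode_symbolic=$(stat -c '%a' "$target")

echo "numeric: $mode_numeric, symbolic: $mode_symbolic"
```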

GitHub Action to generate the artifacts

To automate the generation of artifacts, we can use GitHub Actions (or GitLab CI, Jenkins, etc.). Here is an example workflow that generates OCI images from a GitHub repository:

```yaml
name: Generate Falco Artifacts

on:
  push:

env:
  OCI_REGISTRY: ghcr.io

jobs:
  build-image:
    name: Build OCI Image
    runs-on: ubuntu-latest
    permissions:
      contents: read
      packages: write
      id-token: write
    strategy:
      matrix:
        include:
          - rule_file: config/rules/never-chmod-777.yaml
            name: never-chmod-777
            version: 1.0.0
    steps:
      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@f95db51fddba0c2d1ec667646a06c2ce06100226 # v3.0.0

      - name: Log into registry ${{ env.OCI_REGISTRY }}
        if: github.event_name != 'pull_request'
        uses: docker/login-action@343f7c4344506bcbf9b4de18042ae17996df046d # v3.0.0
        with:
          registry: ${{ env.OCI_REGISTRY }}
          username: ${{ github.actor }}
          password: ${{ secrets.GITHUB_TOKEN }}

      - name: Checkout Falcoctl Repo
        uses: actions/checkout@v3
        with:
          repository: falcosecurity/falcoctl
          ref: main
          path: tools/falcoctl

      - name: Setup Golang
        uses: actions/setup-go@v4
        with:
          go-version: '^1.20'
          cache-dependency-path: tools/falcoctl/go.sum

      - name: Build falcoctl
        run: make
        working-directory: tools/falcoctl

      - name: Install falcoctl in /usr/local/bin
        run: |
          mv tools/falcoctl/falcoctl /usr/local/bin

      - name: Checkout Rules Repo
        uses: actions/checkout@v3

      - name: force owner name to lowercase # Required for organizations/users with uppercase letters in their GitHub name (which is my case...)
        run: |
          owner=$(echo $reponame | cut -d'/' -f1 | tr '[:upper:]' '[:lower:]')
          echo "owner=$owner" >>${GITHUB_ENV}
        env:
          reponame: '${{ github.repository }}'

      - name: Upload OCI artifacts
        run: |
          cp ${rule_file} $(basename ${rule_file})
          falcoctl registry push \
            --config /dev/null \
            --type rulesfile \
            --version ${version} \
            ${OCI_REGISTRY}/${owner}/${name}:${version} $(basename ${rule_file})
        env:
          version: ${{ matrix.version }}
          rule_file: ${{ matrix.rule_file }}
          name: ${{ matrix.name }}
```

To add a Falco rule, simply create the file in the repository and add its path, name and version to the workflow matrix:

```yaml
jobs:
  build-image:
    # ...
    strategy:
      matrix:
        include:
          - rule_file: config/rules/never-chmod-777.yaml
            name: never-chmod-777
            version: 1.0.0
          - rule_file: config/rules/search-for-aws-credentials.yaml
            name: search-for-aws-credentials
            version: 0.1.1
```

I also want to share Thomas Labarussias' method, which uses semver notation to publish each image under several tags (e.g. 1.0.0, 1.0, 1, latest):

```yaml
- name: Upload OCI artifacts
  run: |
    MAJOR=$(echo ${version} | cut -f1 -d".")
    MINOR=$(echo ${version} | cut -f1,2 -d".")
    cp ${rule_file} $(basename ${rule_file})
    falcoctl registry push \
      --config /dev/null \
      --type rulesfile \
      --version ${version} \
      --tag latest --tag ${MAJOR} --tag ${MINOR} --tag ${version} \
      ${OCI_REGISTRY}/${owner}/${name}:${version} $(basename ${rule_file})
  env:
    version: ${{ matrix.version }}
    rule_file: ${{ matrix.rule_file }}
    name: ${{ matrix.name }}
```

Version 1.0.0 therefore gets the tags latest, 1.0, 1 and 1.0.0. In my falcoctl configuration, I can reference the tag 1.0, which points to the latest release of the 1.0.x branch. Likewise, 1 points to the latest 1.x.x.
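Before adding an entry to the matrix, it can be handy to preview locally which image references the workflow will push. The sketch below reproduces the shell logic of the workflow steps above: it lowercases the owner, then cuts the semver version with `cut`. `preview_refs` is a hypothetical helper name, and CuistOps/falco is simply the repository used as an example in this article.

```shell
#!/usr/bin/env bash
# Hypothetical helper reproducing the workflow's shell logic outside GitHub Actions.
# Usage: preview_refs OWNER/REPO NAME VERSION [REGISTRY]
preview_refs() {
  local repo="$1" name="$2" version="$3" registry="${4:-ghcr.io}"
  local owner major minor tag
  # Same transformation as the "force owner name to lowercase" step
  owner=$(echo "$repo" | cut -d'/' -f1 | tr '[:upper:]' '[:lower:]')
  # Same semver split as the multi-tag "Upload OCI artifacts" step
  major=$(echo "$version" | cut -f1 -d".")
  minor=$(echo "$version" | cut -f1,2 -d".")
  for tag in latest "$major" "$minor" "$version"; do
    echo "${registry}/${owner}/${name}:${tag}"
  done
}

preview_refs CuistOps/falco never-chmod-777 1.0.0
```

For version 1.0.0, this prints the latest, 1, 1.0 and 1.0.0 references under ghcr.io/cuistops/never-chmod-777.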

Create your own artifact index

Now let's create our own index so that falcoctl can reference our OCI images. All we need is an HTTP server exposing a YAML file that describes the OCI images.

To do this, I simply publish, in a GitHub repository, an index.yaml file describing my OCI image ghcr.io/cuistops/never-chmod-777:1.0.0:

```yaml
- name: cuistops-never-chmod-777
  type: rulesfile
  registry: ghcr.io
  repository: cuistops/never-chmod-777
  description: Never use 'chmod 777' or 'chmod a+rwx'
  home: https://github.com/CuistOps/falco
  keywords:
    - never-chmod-777
  license: apache-2.0
  maintainers:
    - email: quentinj@une-pause-cafe.fr
      name: Quentin JOLY
  sources:
    - https://raw.githubusercontent.com/CuistOps/falco/main/config/rules/never-chmod-777.yaml
```

This YAML file is publicly available at https://raw.githubusercontent.com/CuistOps/falco/main/config/index/index.yaml. I then add it as an index in falcoctl:

```shell
$ falcoctl index add cuistops-security https://raw.githubusercontent.com/CuistOps/falco/main/config/index/index.yaml
2024-04-17 20:32:28 INFO Adding index name: cuistops-security path: https://raw.githubusercontent.com/CuistOps/falco/main/config/index/index.yaml
2024-04-17 20:32:28 INFO Index successfully added
```

I can now list the artifacts available in my cuistops-security index:

```shell
$ falcoctl artifact list --index cuistops-security
INDEX              ARTIFACT                  TYPE       REGISTRY  REPOSITORY
cuistops-security  cuistops-never-chmod-777  rulesfile  ghcr.io   cuistops/never-chmod-777
```

To follow this artifact, I only need to reference the package name declared in my index.yaml. Here is my complete falcoctl configuration file:

```yaml
artifact:
  follow:
    every: 6h0m0s
    falcoversions: http://localhost:8765/versions
    refs:
      - cuistops-never-chmod-777:1.0.0
driver:
  type: modern_ebpf
  name: falco
  repos:
    - https://download.falco.org/driver
  version: 7.0.0+driver
  hostroot: /
indexes:
  - name: cuistops-security
    url: https://raw.githubusercontent.com/CuistOps/falco/main/config/index/index.yaml
    backend: "https"
```

As soon as I run `falcoctl artifact follow`, the never-chmod-777 rules are installed automatically.

```shell
$ falcoctl artifact follow
2024-04-17 20:57:56 INFO Creating follower artifact: cuistops-never-chmod-777:1.0.0 check every: 6h0m0s
2024-04-17 20:57:56 INFO Starting follower artifact: ghcr.io/cuistops/never-chmod-777:1.0.0
2024-04-17 20:57:57 INFO Found new artifact version followerName: ghcr.io/cuistops/never-chmod-777:1.0.0 tag: 1.0.0
2024-04-17 20:58:00 INFO Artifact correctly installed
                         ├ followerName: ghcr.io/cuistops/never-chmod-777:1.0.0
                         ├ artifactName: ghcr.io/cuistops/never-chmod-777:1.0.0
                         ├ type: rulesfile
                         ├ digest: sha256:9fa17441da69ec590f3d9c0a58c957646d55060ffa2deae84d99b513a5041e6d
                         └ directory: /etc/falco
```

Warning

During my experiments, I ran into a small problem with the default falcoctl configuration: the falcosecurity index was not recognized.

```shell
$ falcoctl index list
NAME  URL  ADDED  UPDATED
```

This is caused by an error in the indexes section of the configuration (/etc/falcoctl/config.yaml): the backend field is mandatory and must be filled in.

```yaml
indexes:
  - name: falcosecurity
    url: https://falcosecurity.github.io/falcoctl/index.yaml
    backend: "https" # <--- Add this line
```

I proposed a small PR to fix this problem, but until the fix ships (in the next release, v0.8.0), you may run into the same issue.
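Until the fix ships, you can scan the configuration file to spot index entries without a backend field. This is a rough, hypothetical awk-based check, not a falcoctl feature. It assumes the indexes section is laid out as in the snippet above, with one `- name:` line per entry and `backend:` inside the entry. It is not a general YAML parser.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list entries under "indexes:" that have no "backend:" key.
# Line-based heuristic assuming the layout shown above; it is not a YAML parser.
# Usage: check_index_backends [CONFIG_FILE]   (exit status 1 if an entry lacks a backend)
check_index_backends() {
  awk '
    function check_entry() {
      if (name != "" && !has_backend) { print "missing backend: " name; bad = 1 }
      name = ""
      has_backend = 0
    }
    /^indexes:/ { in_idx = 1; next }
    in_idx && /^[A-Za-z]/ { check_entry(); in_idx = 0 }   # next top-level key ends the section
    in_idx && /^[ \t]*- name:/ { check_entry(); name = $3 }
    in_idx && /^[ \t]*backend:/ { has_backend = 1 }
    END { check_entry(); exit (bad ? 1 : 0) }
  ' "${1:-/etc/falcoctl/config.yaml}"
}

# Demo on a temporary file reproducing the faulty entry
cfg=$(mktemp)
cat > "$cfg" <<'EOF'
indexes:
  - name: falcosecurity
    url: https://falcosecurity.github.io/falcoctl/index.yaml
EOF
check_index_backends "$cfg" || echo "at least one index entry needs a backend field"
rm -f "$cfg"
```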

In Falco's Helm chart, the artifact indexes to use can be declared in values.yaml:

```yaml
falcoctl:
  config:
    indexes:
      - name: cuistops-security
        url: https://raw.githubusercontent.com/CuistOps/falco/main/config/index/index.yaml
        backend: "https"
```

Conclusion

Falco is a very interesting product that convinced me with its ease of use and flexibility.

If you want to dig deeper into the subject, I also recommend the talk "Reacting in time to threats in Kubernetes with Falco" (Rachid Zarouali, Thomas Labarussias).

Thank you for reading this article, I hope it was useful to you and made you want to try Falco.