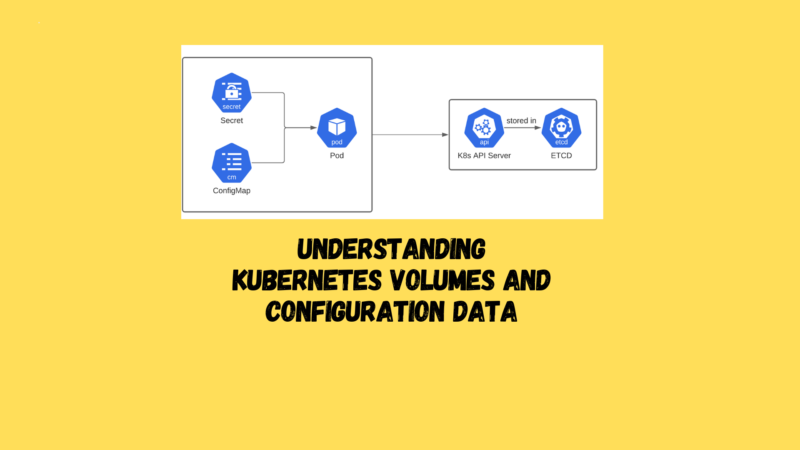

In Kubernetes, a volume is essentially a directory that all containers within a pod can access, offering the added benefit of data persistence even when individual containers are restarted.

Volumes can be categorized into several types:

- Node-local ephemeral volumes, like emptyDir
- Generic networked volumes, including nfs and cephfs
- Cloud provider-specific volumes, such as AWS EBS or AWS EFS
- Special-purpose volumes, for instance, secret or configMap

The selection of a volume type is guided by your specific requirements. For instance, emptyDir is suitable for transient storage needs, whereas for scenarios requiring data persistence beyond node failures, opting for more durable options or cloud provider-specific volumes would be advisable.

Exchanging Data Between Containers via a Local Volume

Use Case

You need to facilitate data exchange between two or more containers running in the same pod through filesystem operations.

Solution

An emptyDir volume type is ideal for this purpose. This type of local volume allows containers within the same pod to share data via a common directory that is mounted at specified locations within each container.

Consider the following example of a pod manifest named exchangedata.yaml. This pod includes two containers, c1 and c2, both of which mount the emptyDir volume named xchange at different points in their file systems:

apiVersion: v1
kind: Pod
metadata:
  name: sharevol
spec:
  containers:
  # First container: sees the shared volume at /tmp/xchange
  - name: c1
    image: ubuntu:20.04
    command:
    - "/bin/bash"
    - "-c"
    - "sleep 10000"
    volumeMounts:
    - name: xchange
      mountPath: "/tmp/xchange"
  # Second container: sees the same volume at /tmp/data
  - name: c2
    image: ubuntu:20.04
    command:
    - "/bin/bash"
    - "-c"
    - "sleep 10000"
    volumeMounts:
    - name: xchange
      mountPath: "/tmp/data"
  volumes:
  # Pod-scoped scratch volume shared by both containers
  - name: xchange
    emptyDir: {}

To use this setup, apply the manifest and then interact with the volume through shell commands to write and read data across containers:

# Create the pod with the shared volume
kubectl apply -f exchangedata.yaml

# Open a shell in container c1 and write a file to the shared volume
kubectl exec sharevol -c c1 -i -t -- bash
echo 'some data' > /tmp/xchange/data
exit

# Open a shell in container c2 and read the same file
kubectl exec sharevol -c c2 -i -t -- bash
cat /tmp/data/data
exit

Discussion

Local volumes like emptyDir are stored on the node where the pod is running, and they exist only as long as the pod stays on that node. If the pod is deleted or rescheduled, or if the node fails or is drained for maintenance, any data stored in the emptyDir is lost.

While emptyDir volumes are suitable for temporary data and scratch space, for scenarios requiring data persistence across node failures, you should consider using Persistent Volumes (PVs) or networked storage solutions. These alternatives provide a more resilient storage option, ensuring data durability and availability across node maintenance and failures.
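For the scratch-space case, a minimal sketch of a memory-backed emptyDir with a size cap might look like the following. The pod name scratchdemo, the container name, and the 64Mi limit are assumptions chosen for illustration; medium and sizeLimit are standard emptyDir fields.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: scratchdemo            # hypothetical name
spec:
  containers:
  - name: worker               # hypothetical name
    image: busybox:1.36
    command: ["sh", "-c", "sleep 3600"]
    volumeMounts:
    - name: scratch
      mountPath: /scratch
  volumes:
  - name: scratch
    emptyDir:
      medium: Memory           # back the volume with tmpfs instead of node disk
      sizeLimit: 64Mi          # assumed cap for this example
```

Files written to a memory-backed emptyDir count against the container's memory usage, so a size limit is usually worth setting.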

See Also

- Kubernetes documentation on volumes

Passing an API Access Key to a Pod Using a Secret

Use Case

As an administrator, you want to securely pass an API access key to your developers for accessing an external service, without exposing the key in plaintext within your Kubernetes manifests.

Solution

Utilize a Kubernetes Secret to securely store and pass the access key.

Steps:

- Create a Passphrase File: First, save the passphrase “open sesame” to a file named passphrase:

echo -n "open sesame" > ./passphrase

- Create a Secret from the File: Next, create a Kubernetes secret named pp from the passphrase file:

kubectl create secret generic pp --from-file=./passphrase

- Describe the Secret: (Optional) To verify the secret’s creation and see its metadata:

kubectl describe secrets/pp

Using the Secret in a Pod:

As a developer, to use the secret within a pod:

- Create a Pod Manifest: Define a pod that mounts the secret as a volume. For instance, save the following manifest as ppconsumer.yaml:

apiVersion: v1
kind: Pod
metadata:
  name: ppconsumer
spec:
  containers:
  - name: shell
    image: busybox:1.36
    command:
    - "sh"
    - "-c"
    # Print the mount entry for the secret volume, then keep the pod alive
    - "mount | grep access && sleep 3600"
    volumeMounts:
    - name: passphrase
      mountPath: "/tmp/access"
      readOnly: true
  volumes:
  - name: passphrase
    secret:
      secretName: pp

- Launch the Pod and Check the Logs:

kubectl apply -f ppconsumer.yaml

kubectl logs ppconsumer

- Access the Passphrase: To read the passphrase from within the container:

kubectl exec ppconsumer -i -t -- sh

cat /tmp/access/passphrase

exit

Discussion

Secrets are namespace-specific, so their setup and usage must consider the namespace context. They can be consumed in pods through:

- Volumes: Mounted at a specific path in the container, as demonstrated.

- Environment Variables: Injected into the container’s environment.

An individual Secret is limited to 1 MiB in size. When a Secret is mounted into a pod as a volume, its contents are exposed through a memory-backed tmpfs, so they are not written to the node's disk.
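The environment-variable route mentioned above can be sketched as follows. This is a minimal example that reuses the pp secret and its passphrase key from the Solution; the pod name ppconsumer-env and the variable name PASSPHRASE are assumptions made for illustration.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: ppconsumer-env         # hypothetical name
spec:
  containers:
  - name: shell
    image: busybox:1.36
    command: ["sh", "-c", "sleep 3600"]
    env:
    - name: PASSPHRASE         # hypothetical variable name
      valueFrom:
        secretKeyRef:
          name: pp             # the Secret created earlier
          key: passphrase      # key derived from the file name
```

Unlike a mounted volume, an environment variable is read once at container start, so a later change to the Secret is not visible until the container restarts.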

Tips

- Secret Types: kubectl create secret supports different types of secrets, including docker-registry for container registry credentials, generic for creating secrets from files or literals, and tls for TLS certificates and keys.
- Viewing Secret Content: kubectl describe does not display secret contents, to avoid accidental exposure. The values remain retrievable, for example with kubectl get secret -o yaml, which prints them base64-encoded; you can decode them with standard command-line tools.
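To illustrate the base64 encoding, the pp secret from the Solution would look roughly like this when written as a manifest. This is a sketch with server-populated metadata omitted; the data value is simply the base64 encoding of open sesame.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: pp
type: Opaque
data:
  # base64 of "open sesame"; this is an encoding, not encryption
  passphrase: b3BlbiBzZXNhbWU=
```

Anyone who can read this object can recover the passphrase, which is why access to Secrets should be restricted.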

Encrypting Secrets at Rest

Kubernetes allows encrypting secrets at rest using the --encryption-provider-config option with the kube-apiserver, enhancing the security of sensitive data.
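As a rough sketch, the file passed to --encryption-provider-config could look like the following. The key name key1 and the placeholder key value are assumptions; a real setup needs a randomly generated, base64-encoded 32-byte key and a restart of the API server.

```yaml
apiVersion: apiserver.config.k8s.io/v1
kind: EncryptionConfiguration
resources:
  - resources:
      - secrets
    providers:
      # The first provider is used to encrypt newly written Secrets
      - aescbc:
          keys:
            - name: key1                                  # hypothetical key name
              secret: REPLACE_WITH_BASE64_32_BYTE_KEY     # placeholder, not a real key
      # Fallback so Secrets stored before encryption was enabled can still be read
      - identity: {}
```

Secrets that already exist stay unencrypted in etcd until they are rewritten.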

See Also

- Kubernetes documentation on secrets

- Kubernetes documentation on encrypting secrets at rest

Providing Configuration Data to an Application

Use Case

You need a method to provide configuration data to your application without embedding it in the container image or explicitly stating it within the pod’s specifications.

Solution

A ConfigMap is a Kubernetes resource designed to store non-confidential data in key-value pairs. It can be used to inject configuration settings into your application, either as environment variables or as configuration files mounted inside the pod.

For instance, to create a ConfigMap named nginxconfig with the key nginxgreeting and the value "hello from nginx", you would use the following command:

kubectl create configmap nginxconfig --from-literal=nginxgreeting="hello from nginx"
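The same ConfigMap can also be written declaratively. The manifest below is a minimal sketch equivalent to the command above, which you could keep in version control and apply with kubectl apply -f.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginxconfig
data:
  # Same key and value as the --from-literal flag above
  nginxgreeting: "hello from nginx"
```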

Using ConfigMap in a Pod:

To use the ConfigMap, reference it in your pod manifest. Below is an example of a pod specification that uses the ConfigMap to set an environment variable within an nginx container:

apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
  - name: nginx
    image: nginx:1.25.2
    env:
    # NGINX_GREETING takes its value from the nginxgreeting key of nginxconfig
    - name: NGINX_GREETING
      valueFrom:
        configMapKeyRef:
          name: nginxconfig
          key: nginxgreeting

Save this YAML as nginxpod.yaml and create the pod with:

kubectl apply -f nginxpod.yaml

To check the container’s environment variables, including the one set by the ConfigMap:

kubectl exec nginx -- printenv

Configuring as a File:

ConfigMaps can also be mounted as files inside a pod. For example, if you have a configuration file example.cfg:

debug: true
home: ~/abc

Create a ConfigMap with this file:

kubectl create configmap configfile --from-file=example.cfg

To mount this ConfigMap as a volume inside a pod, here’s an example manifest for a pod named teckbootcamps:

apiVersion: v1
kind: Pod
metadata:
  name: teckbootcamps
spec:
  containers:
  - image: busybox:1.36
    command:
    - sleep
    - "3600"
    volumeMounts:
    # Each key of the ConfigMap appears as a file under this path
    - mountPath: /teckbootcamps
      name: teckbootcamps
    name: busybox
  volumes:
  - name: teckbootcamps
    configMap:
      name: configfile

After creating this pod, you can verify the presence of example.cfg inside it:

kubectl exec -ti teckbootcamps -- ls -l /teckbootcamps
kubectl exec -ti teckbootcamps -- cat /teckbootcamps/example.cfg

Discussion

ConfigMaps are an efficient way to inject configuration data into pods, enabling applications to be more dynamic and flexible with their configurations. They support both environment variables and volume-mounted files, providing versatility in how configurations are applied.
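When an application needs every key of a ConfigMap, envFrom avoids listing the keys one by one. The sketch below assumes the nginxconfig ConfigMap from the Solution; the pod name nginx-envfrom is hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-envfrom          # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx:1.25.2
    envFrom:
    # Every key in nginxconfig becomes an environment variable of the same name
    - configMapRef:
        name: nginxconfig
```

With this form the variable is named nginxgreeting, after the key itself, rather than NGINX_GREETING.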

See Also

- Kubernetes documentation on Configuring a Pod to Use a ConfigMap, for more detailed information on how to use ConfigMaps effectively in your Kubernetes deployments.

Using a Kubernetes Persistent Volume with Minikube

Use Case

You want to ensure that the data used by your container persists beyond pod restarts, avoiding data loss.

Solution

In a Minikube environment, you can achieve persistence by using a Persistent Volume (PV) of type hostPath, which mounts a directory from the host node into the pod, acting as persistent storage.

Steps:

- Define the Persistent Volume: Create a manifest file named hostpath-pv.yaml for a PV named hostpathpv:

apiVersion: v1
kind: PersistentVolume
metadata:
  name: hostpathpv
  labels:
    type: local
spec:
  storageClassName: manual
  capacity:
    storage: 1Gi
  accessModes:
  - ReadWriteOnce
  hostPath:
    # Directory on the Minikube node that backs this volume
    path: "/tmp/pvdata"

- Prepare the Directory on Minikube Node: Use Minikube to SSH into the node and prepare the /tmp/pvdata directory:

minikube ssh
mkdir /tmp/pvdata && \
echo 'I am content served from a delicious persistent volume' > /tmp/pvdata/index.html
exit

- Create the Persistent Volume: Apply the manifest to create the PV:

kubectl apply -f hostpath-pv.yaml

- Define a Persistent Volume Claim (PVC): Create a PVC via the pvc.yaml manifest, requesting 200 MiB of storage:

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mypvc
spec:
  # Must match the storageClassName of the PV for the claim to bind
  storageClassName: manual
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 200Mi

- Launch the PVC: Apply the PVC manifest:

kubectl apply -f pvc.yaml

- Use the PVC in a Deployment: Create a deployment that uses the PVC for persistent storage in nginx-using-pv.yaml:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-with-pv
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: webserver
        image: nginx:1.25.2
        ports:
        - containerPort: 80
        volumeMounts:
        # Serve the PV contents as the nginx document root
        - mountPath: "/usr/share/nginx/html"
          name: webservercontent
      volumes:
      - name: webservercontent
        persistentVolumeClaim:
          claimName: mypvc

- Launch the Deployment:

kubectl apply -f nginx-using-pv.yaml

Verification

You can verify the presence of your data by creating a service and accessing it via an Ingress object, or simply by executing into the pod and checking the mounted volume’s contents.
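For the service-based check, a minimal Service in front of the deployment might look like this. It selects pods by the app: nginx label used in the deployment above; the Service name is an assumption.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-with-pv          # hypothetical name
spec:
  selector:
    app: nginx                 # matches the pod label in the deployment
  ports:
  - port: 80
    targetPort: 80             # containerPort of the webserver container
```

A request to this Service should return the index.html written to /tmp/pvdata earlier.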

Discussion

While the hostPath type PV works well for local development with Minikube, it’s not suited for production environments. In production, you should consider using networked storage solutions like NFS or cloud storage services such as AWS EBS to ensure data persistence and resilience against node failures.
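As a sketch of the networked alternative, an NFS-backed PV uses the same structure with a different volume source. The PV name, server name, and export path below are hypothetical and would need to point at a real NFS export.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfspv                       # hypothetical name
spec:
  capacity:
    storage: 1Gi
  accessModes:
  - ReadWriteMany                   # NFS can be mounted read-write by many nodes
  nfs:
    server: nfs.example.internal    # hypothetical NFS server
    path: /exports/web              # hypothetical export path
```

Because the data lives off the node, pods rescheduled to another node can reattach to it.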

Notes

- PVs are non-namespaced, cluster-wide resources.

- PVCs are namespaced. A pod can only use a PVC from its own namespace, while the PVC itself can bind to any matching cluster-wide PV.

See Also

- Kubernetes documentation on Persistent Volumes

- “Configure a Pod to Use a PersistentVolume for Storage” in the Kubernetes documentation for more detailed guidance on setting up and using PVs and PVCs.

Understanding Data Persistency on Minikube

Use Case

You want to deploy a stateful application, such as a MySQL database, on Minikube and ensure that the data persists even if the hosting pod restarts.

Solution

Leverage a PersistentVolumeClaim (PVC) to request storage that persists beyond the lifecycle of individual pods. This approach provides a persistent storage solution for stateful applications on Minikube.

Steps:

- Create a Persistent Volume Claim: Define a PVC to request 1 GiB of storage with data.yaml:

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: data
spec:
  # No storageClassName is set, so the cluster's default storage class is used
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi

- Apply the PVC: Create the PVC on Minikube, which automatically provisions a PersistentVolume (PV) to satisfy the claim:

kubectl apply -f data.yaml

- Verify the PVC and PV: Check the status of your PVC and the automatically created PV:

kubectl get pvc

kubectl get pv

- Use the PVC in Your Application: Mount the claimed persistent volume in your application pod. For a MySQL database, the volume should be mounted at /var/lib/mysql. Here’s an example pod definition:

apiVersion: v1
kind: Pod
metadata:
  name: db
spec:
  containers:
  - image: mysql:8.1.0
    name: db
    volumeMounts:
    # MySQL keeps its data files in this directory
    - mountPath: /var/lib/mysql
      name: data
    env:
    # Plaintext password, acceptable only for local testing
    - name: MYSQL_ROOT_PASSWORD
      value: root
  volumes:
  - name: data
    persistentVolumeClaim:
      claimName: data

Discussion

Minikube comes with a default storage class that supports dynamic provisioning of persistent volumes using the hostPath provisioner. This setup simulates network-attached storage within the Minikube environment, making it suitable for development and testing of stateful applications.

When you create a PVC like the one above, Minikube’s storage provisioner dynamically creates a hostPath PV that points to a directory on the Minikube VM. This ensures that data written by your application persists across pod restarts, residing on the Minikube node itself.
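The claim can also name the storage class explicitly instead of relying on the default. The sketch below assumes the class is called standard, which is what recent Minikube releases ship; kubectl get storageclass shows the actual name on your cluster. The claim name is hypothetical.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: data-explicit            # hypothetical name
spec:
  storageClassName: standard     # assumed name of Minikube's default class
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
```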

Verification

To verify data persistence:

- SSH into Minikube:

minikube ssh

- List the files in the provisioned directory to see your database files:

ls -l /tmp/hostpath-provisioner/default/data

Note

While hostPath volumes offer a straightforward way to test persistent storage in Minikube, they are not suitable for production environments. Production applications should use more robust storage solutions like cloud provider volumes (e.g., AWS EBS, GCP Persistent Disk) or network storage (e.g., NFS, iSCSI).

Storing Encrypted Secrets in Version Control

Use Case

You want to include Kubernetes secret manifests in version control safely, even in public repositories, without exposing sensitive data.

Solution

Utilize sealed-secrets, a Kubernetes controller that enables the encryption of Secret objects into SealedSecrets. These encrypted objects can be safely stored and shared in version control. When applied to a cluster running the sealed-secrets controller, they are decrypted into regular Kubernetes Secret objects.

Steps

- Install Sealed-Secrets Controller: Install the v0.23.1 release of the sealed-secrets controller in your cluster:

$ kubectl apply -f https://github.com/bitnami-labs/sealed-secrets/releases/download/v0.23.1/controller.yaml

- Verify Installation: Check the custom resource and controller pod:

$ kubectl get customresourcedefinitions
NAME                        CREATED AT
sealedsecrets.bitnami.com   2024-01-18T09:23:33Z

$ kubectl get pods -n kube-system -l name=sealed-secrets-controller
NAME                                         READY   STATUS    RESTARTS   AGE
sealed-secrets-controller-7ff6f47d47-dd76s   1/1     Running   0          2m22s

- Download and Install Kubeseal: Download the kubeseal binary for your platform, which is used to encrypt secrets (the example uses the macOS amd64 build):

$ wget https://github.com/bitnami-labs/sealed-secrets/releases/download/v0.23.1/kubeseal-0.23.1-darwin-amd64.tar.gz
$ tar xf kubeseal-0.23.1-darwin-amd64.tar.gz
$ sudo install -m 755 kubeseal /usr/local/bin/kubeseal
$ kubeseal --version
kubeseal version: 0.23.1

- Generate a Secret Manifest: Create a generic secret manifest in JSON format:

$ kubectl create secret generic teckbootcamps --from-literal=password=root -o json \
    --dry-run=client > secret.json
$ cat secret.json
{
  "kind": "Secret",
  "apiVersion": "v1",
  "metadata": {
    "name": "teckbootcamps",
    "creationTimestamp": null
  },
  "data": {
    "password": "cm9vdA=="
  }
}

- Encrypt the Secret with Kubeseal: Use kubeseal to encrypt the secret into a SealedSecret object:

$ kubeseal < secret.json > sealedsecret.json
$ cat sealedsecret.json
{
  "kind": "SealedSecret",
  "apiVersion": "bitnami.com/v1alpha1",
  "metadata": {
    "name": "teckbootcamps",
    "namespace": "default",
    "creationTimestamp": null
  },
  "spec": {
    "template": {
      "metadata": {
        "name": "teckbootcamps",
        "namespace": "default",
        "creationTimestamp": null
      }
    },
    "encryptedData": {
      "password": "AgCyN4kBwl/eLt7aaaCDDNlFDp5s93QaQZZ/mm5BJ6SK1WoKyZ45hz..."
    }
  }
}

- Apply the SealedSecret: Create the SealedSecret in your cluster:

$ kubectl apply -f sealedsecret.json
sealedsecret.bitnami.com/teckbootcamps created

The SealedSecret can now be committed to version control safely.

Discussion

The sealed-secrets controller decrypts SealedSecrets only within the cluster, converting them into regular Secret objects. Because kubeseal encrypts the data before it is committed, the sensitive values stay protected even if the version control system is publicly accessible.

Remember, while SealedSecrets are safe to share, the resulting unsealed Secret objects in the cluster are only base64-encoded and need to be protected within the cluster using proper RBAC policies to restrict access.
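One way to restrict access is a Role that allows reading only the unsealed secret, bound to the one identity that needs it. This is a sketch, not a complete policy; the Role and RoleBinding names and the app service account are hypothetical.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: teckbootcamps-secret-reader    # hypothetical name
  namespace: default
rules:
- apiGroups: [""]                      # core API group, where Secrets live
  resources: ["secrets"]
  resourceNames: ["teckbootcamps"]     # only the unsealed Secret from this recipe
  verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: read-teckbootcamps-secret      # hypothetical name
  namespace: default
subjects:
- kind: ServiceAccount
  name: app                            # hypothetical service account
  namespace: default
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: teckbootcamps-secret-reader
```

RBAC is additive, so this only helps if no broader role already grants the same subjects access to all Secrets in the namespace.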