Introduction

When you deploy a containerized application to a Kubernetes cluster, pulling the required container images from the registry can delay startup. This latency is particularly problematic when applications need to scale out quickly or process high-speed, real-time data. Fortunately, several tools and strategies can improve the availability and caching of container images in Kubernetes. This article introduces them, including kube-fledged, kuik, Kubernetes’ built-in image caching, local caching, and monitoring and cleaning up unused images.

Kubernetes Caching

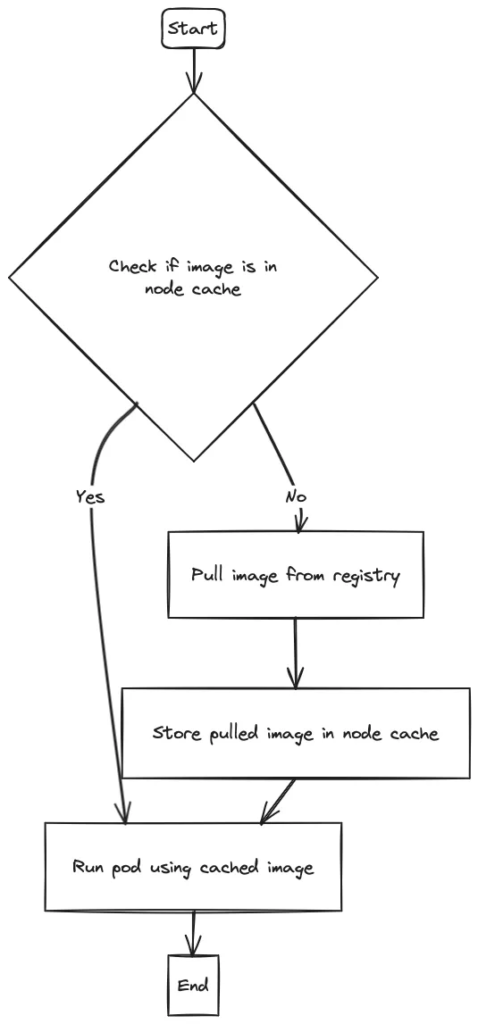

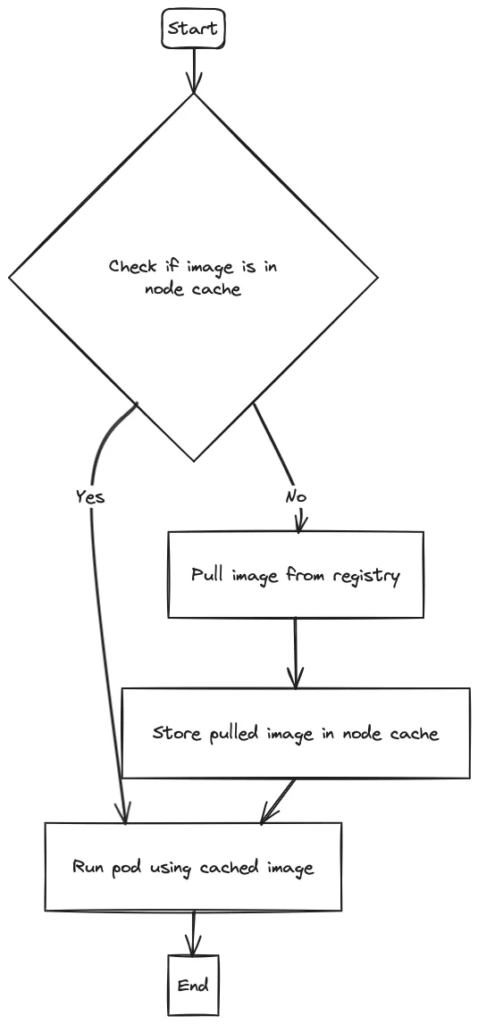

When a workload is deployed to Kubernetes, the containers in a Pod are naturally based on OCI container images. These images can be pulled from a variety of private/public repositories. Kubernetes caches the image locally on each node that pulls the image so that other Pods can use the same image.

However, in most use cases this is not enough. Today, most cloud Kubernetes clusters autoscale and allocate nodes dynamically based on customer usage. What if many nodes each have to pull the same image? If the image is large, each pull may take a few minutes, which makes automatic scaling of the application relatively slow.
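The limitation can be illustrated with a toy model. The sketch below is not how the kubelet is implemented; `Node`, `start_pod`, `count_registry_pulls` and the image name are hypothetical. It only assumes what the text above states: every node keeps its own cache, so a newly added node always starts cold.

```python
class Node:
    """Toy model of a worker node with its own local image cache."""

    def __init__(self, name):
        self.name = name
        self.cached_images = set()

    def start_pod(self, image):
        """Return True if the image had to be pulled from the registry."""
        if image in self.cached_images:
            return False  # served from the node-local cache
        self.cached_images.add(image)  # simulate a registry pull
        return True


def count_registry_pulls(nodes, image, pods_per_node):
    """Start pods_per_node pods on every node and count registry pulls."""
    pulls = 0
    for node in nodes:
        for _ in range(pods_per_node):
            if node.start_pod(image):
                pulls += 1
    return pulls


# Hypothetical image name, used only for illustration.
image = "registry.example.com/team/app:1.0"
nodes = [Node(f"node-{i}") for i in range(3)]
print(count_registry_pulls(nodes, image, pods_per_node=4))  # one pull per node

# Scaling out adds a cold node, which has to pull the image again.
new_node = Node("node-3")
print(new_node.start_pod(image))
```

In this model the number of registry pulls grows with the number of nodes rather than with the number of pods, which is why node-local caching alone does not help a cluster that keeps adding nodes.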

Existing solutions

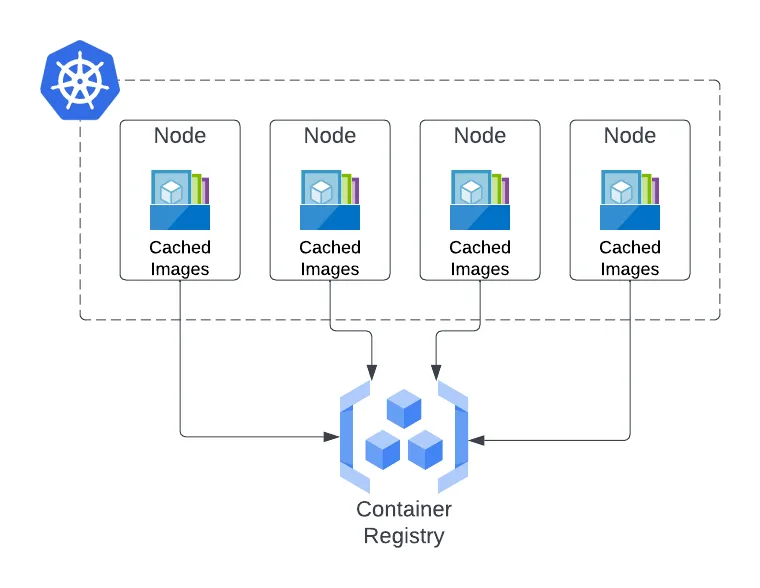

The intended solution is to build a caching layer on top of Kubernetes, so that the cluster has a centralized image cache from which all nodes can “fetch” images. Because the cache needs to be very fast, it should run inside Kubernetes, where all nodes can reach it with the lowest possible latency.

To solve the latency problem of pulling container images from the registry, a widely used method is to run a registry mirror within the cluster.

Two widely used solutions are in-cluster self-hosted registries and pull-through caches.

In the former solution, a local registry runs within the Kubernetes cluster and is configured as a mirror registry in the container runtime, so that image pull requests are directed to the in-cluster registry. In the latter solution, the registry itself has caching capability: the first pull of an image populates the cache, and subsequent requests for that image are served from it.

Other existing solutions include using reliable caching solutions like kuik, enabling image caching in Kubernetes, using local caching, optimizing container image builds, and monitoring and cleaning up unused images.

Harbor

Harbor is a CNCF graduated project. It is a container registry, but what matters most here is that it can act as a pull-through proxy cache.

A pull-through proxy cache is a caching mechanism designed to optimize the distribution and retrieval of container images in a container registry environment. It acts as an intermediary between the client (such as a container runtime or build system) and the upstream container registry.

When a client requests a container image, the pull-through proxy cache checks whether it already has a local copy of the requested image. If it does, the proxy cache serves the image directly to the client without downloading it from the upstream registry. This reduces network latency and saves bandwidth.

If the requested image is not available in the local cache, the proxy cache acts as a normal proxy and forwards the request to the upstream registry. It then retrieves the image from the registry, serves it to the client, and stores a copy in its local cache for future requests.
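The hit and miss paths described above can be sketched in a few lines. This is an illustration of the general pull-through pattern, not Harbor's implementation; `PullThroughCache`, `fetch_upstream` and the image reference are hypothetical names, and image content is reduced to a byte string.

```python
class PullThroughCache:
    """Minimal model of a pull-through proxy cache for image references."""

    def __init__(self, fetch_upstream):
        # fetch_upstream: callable taking an image reference and returning
        # its content from the upstream registry (hypothetical stand-in).
        self.fetch_upstream = fetch_upstream
        self.local = {}
        self.hits = 0
        self.misses = 0

    def pull(self, ref):
        if ref in self.local:
            # Cache hit: serve the local copy, no upstream traffic.
            self.hits += 1
            return self.local[ref]
        # Cache miss: forward to upstream, then keep a copy for next time.
        self.misses += 1
        content = self.fetch_upstream(ref)
        self.local[ref] = content
        return content


upstream_calls = []


def fake_upstream(ref):
    """Stand-in for the upstream registry; records every request."""
    upstream_calls.append(ref)
    return f"layers-of-{ref}".encode()


cache = PullThroughCache(fake_upstream)
cache.pull("library/nginx:1.25")  # miss, goes upstream
cache.pull("library/nginx:1.25")  # hit, served locally
print(cache.hits, cache.misses, len(upstream_calls))
```

A real proxy cache also has to deal with expiry, authentication and tags that move to a new digest; those concerns are left out of this sketch.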

kube-fledged

kube-fledged is a Kubernetes add-on (operator) for creating and managing container image caches directly on the worker nodes of a Kubernetes cluster. It lets users define a list of images and the worker nodes on which to cache them. kube-fledged provides a CRUD API to manage the life cycle of the image cache and supports multiple configurable parameters so that its behavior can be customized as needed.

kube-fledged is a general-purpose solution designed and built for managing image caches in Kubernetes. While its primary use case is to enable quick startup and scaling of Pods, it also supports other use cases.

Working principle

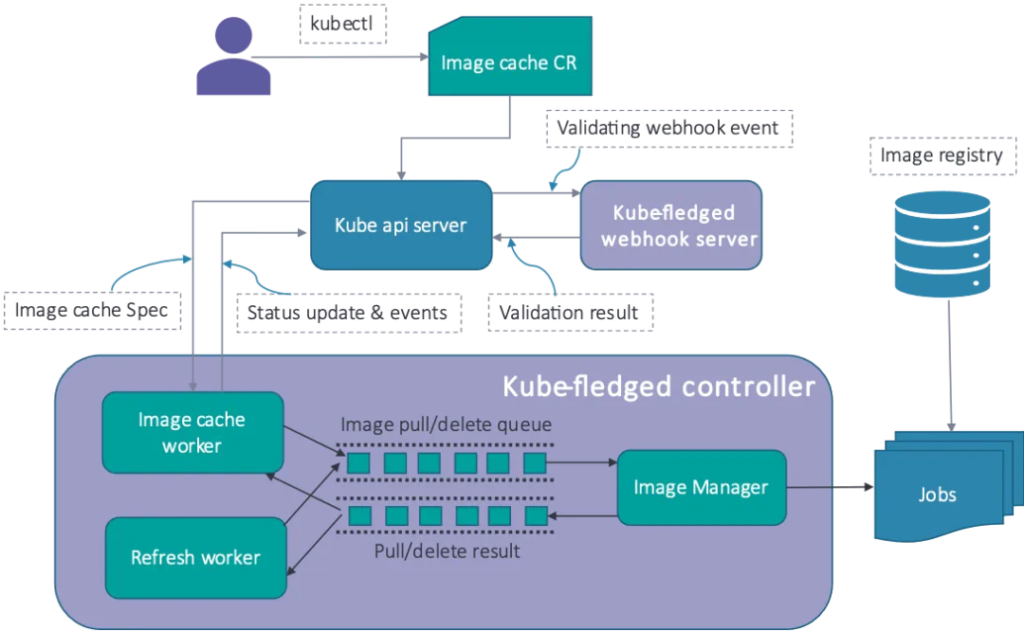

kube-fledged defines a custom resource called ImageCache and implements a custom controller (called kubefledged-controller). Users can create and delete ImageCache resources with kubectl.
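As a rough illustration, an ImageCache manifest could be generated like this. The `apiVersion` value and the `cacheSpec`, `images` and `nodeSelector` field names are assumptions based on the project's published examples and should be checked against the CRD version installed in your cluster; the resource name, namespace, image references and the `image_cache_manifest` helper are hypothetical.

```python
import json


def image_cache_manifest(name, namespace, cache_spec):
    """Build an ImageCache manifest as a plain dict.

    cache_spec is a list of (images, node_selector) pairs; node_selector
    may be None to target every node.
    """
    entries = []
    for images, node_selector in cache_spec:
        entry = {"images": list(images)}
        if node_selector:
            entry["nodeSelector"] = dict(node_selector)
        entries.append(entry)
    return {
        # Assumption: verify the API group/version against your installed CRD.
        "apiVersion": "kubefledged.io/v1alpha2",
        "kind": "ImageCache",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"cacheSpec": entries},
    }


manifest = image_cache_manifest(
    name="imagecache-example",   # hypothetical resource name
    namespace="kube-fledged",    # hypothetical namespace
    cache_spec=[
        (["registry.example.com/team/app:1.0"], None),
        (["registry.example.com/team/db:2.3"], {"tier": "backend"}),
    ],
)
print(json.dumps(manifest, indent=2))
```

Because kubectl accepts JSON as well as YAML, the printed manifest could be piped into `kubectl create -f -` once the field names have been confirmed.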

Kubernetes-image-puller

To cache images, the Kubernetes Image Puller creates a DaemonSet on the target cluster, which in turn creates a pod on each node with one container per image, each running the command sleep 720h. This ensures that all nodes in the cluster have these images cached. The sleep binary used is written in Go (see Scratch Images: https://github.com/che-incubator/kubernetes-image-puller#scratch-images).

It also periodically checks the health of the DaemonSet and recreates it if necessary.

The application can be deployed through Helm or by processing and applying OpenShift templates. Additionally, there is a community-supported Operator on OperatorHub.

kubernetes-image-puller deploys a large number of containers to implement caching: because the mechanism uses a DaemonSet, there is one container per image on every node. For example, with 5 nodes and 10 cached images, 50 containers in the cluster are dedicated to caching alone.
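The mechanism and its cost can be sketched as follows. This is a simplified illustration rather than the manifest the tool actually generates: `puller_daemonset`, `total_cache_containers`, the container names and the image references are hypothetical, and the sketch assumes a `sleep` binary is available inside each container, which the real tool takes care of itself.

```python
def puller_daemonset(name, images):
    """Build a DaemonSet whose pod holds one sleeping container per image."""
    containers = [
        {"name": f"cache-{i}", "image": image, "command": ["sleep", "720h"]}
        for i, image in enumerate(images)
    ]
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "DaemonSet",
        "metadata": {"name": name},
        "spec": {
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": containers},
            },
        },
    }


def total_cache_containers(daemonset, node_count):
    """A DaemonSet runs one pod per node, so containers scale as images x nodes."""
    per_pod = len(daemonset["spec"]["template"]["spec"]["containers"])
    return per_pod * node_count


# Hypothetical image references, used only for illustration.
images = [f"registry.example.com/team/app-{i}:1.0" for i in range(10)]
daemonset = puller_daemonset("image-puller", images)
print(total_cache_containers(daemonset, node_count=5))
```

With 10 images and 5 nodes the function reproduces the figure of 50 containers given above, and the count keeps growing with every image or node added.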

Tugger

Tugger uses a single configuration file, defined through its Helm values. It does not allow “system” configuration (for example, excluding specific images from the cache system) to be separated from “user” configuration.

kube-image-keeper (kuik)

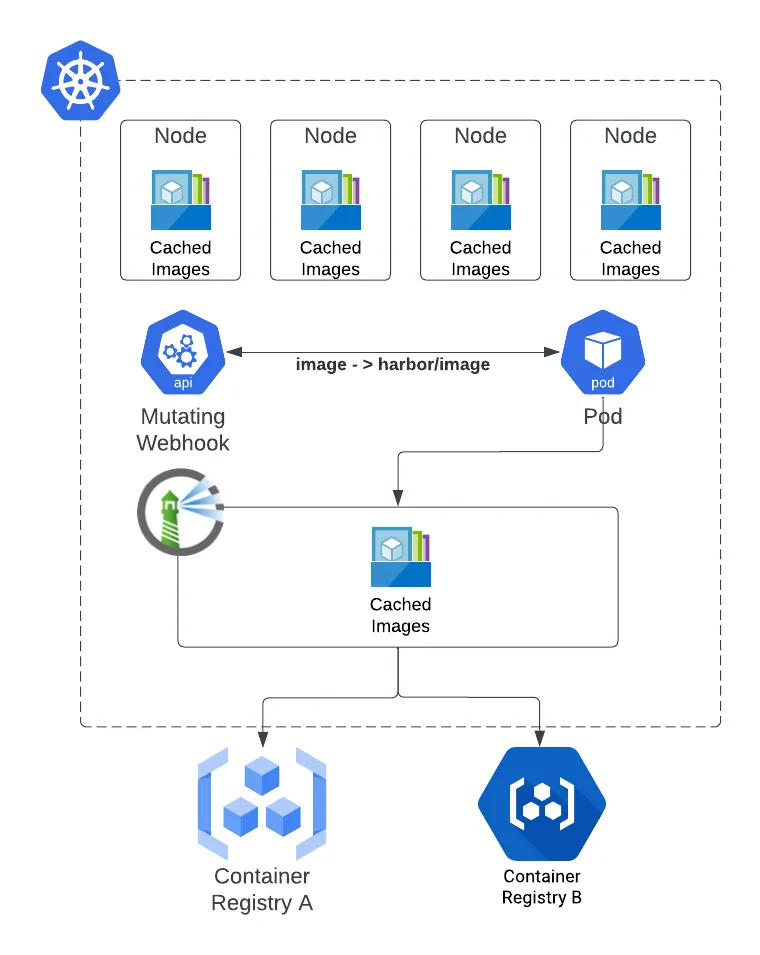

kube-image-keeper (aka kuik, pronounced like “quick”) is a container image caching system for Kubernetes. It saves the container images used by pods in its own local registry, so that these images remain usable when the original images are unavailable.

Working principle

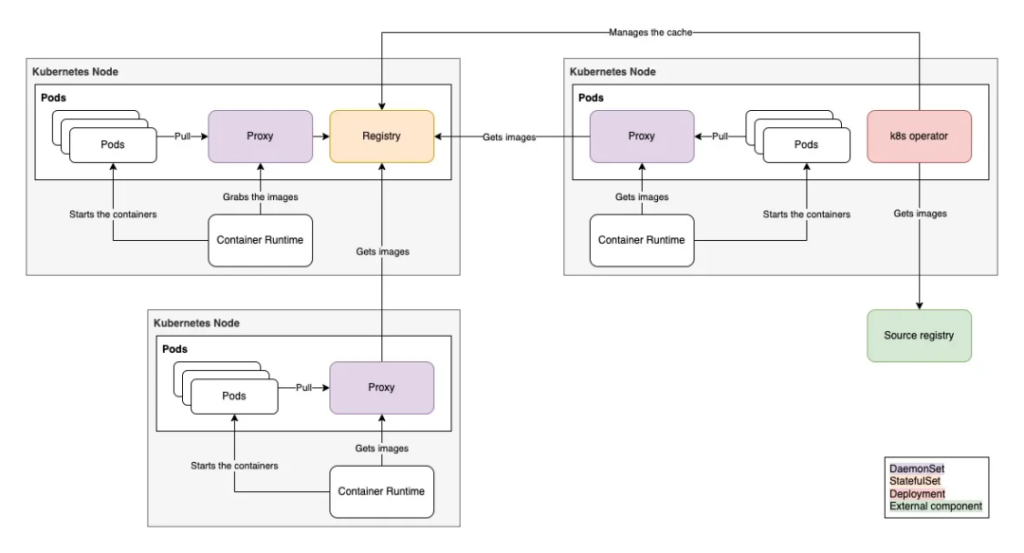

When a pod is created, kuik’s webhook immediately rewrites its image references, adding a localhost:{port}/ prefix (the default port is 7439 and is configurable).

There is an image proxy on localhost:{port}, which serves images from kuik’s cache registry (when the image has been cached) or directly from the original registry (when the image has not been cached).

One controller watches pods and, when new images are discovered, creates CachedImage custom resources for them. Another controller watches these CachedImage custom resources and copies the images from the source registry to kuik’s cache registry accordingly.
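The rewrite and the proxy's routing decision can be approximated as below. This is a simplified sketch of the behavior described above, not kuik's actual code: `rewrite_image` and `resolve_source` are hypothetical helpers, and details the real webhook may handle, such as image name normalization, are omitted.

```python
DEFAULT_PROXY_PORT = 7439  # default port stated above; configurable in kuik


def rewrite_image(image, port=DEFAULT_PROXY_PORT):
    """Prefix an image reference so the pull goes through the local proxy."""
    prefix = f"localhost:{port}/"
    if image.startswith(prefix):
        return image  # already rewritten, leave untouched
    return prefix + image


def resolve_source(rewritten, cached_images, port=DEFAULT_PROXY_PORT):
    """Decide where the proxy serves an image from.

    Returns ("cache", original) if the image is already in the cache
    registry, otherwise ("origin", original).
    """
    prefix = f"localhost:{port}/"
    original = rewritten[len(prefix):] if rewritten.startswith(prefix) else rewritten
    source = "cache" if original in cached_images else "origin"
    return source, original


# Hypothetical image reference, used only for illustration.
rewritten = rewrite_image("registry.example.com/team/app:1.0")
print(rewritten)
print(resolve_source(rewritten, cached_images=set()))
print(resolve_source(rewritten, cached_images={"registry.example.com/team/app:1.0"}))
```

The first lookup falls through to the original registry; once the controllers have copied the image into the cache registry, the same reference is served from the cache.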

Architecture and components

In the kuik namespace you can find:

- A Deployment running the kuik controllers
- A DaemonSet running kuik’s image proxy
- A StatefulSet running kuik’s image cache; when this component runs in HA mode, a Deployment is used instead

Running the image cache obviously requires a certain amount of disk space (please refer to Garbage collection and limitations: https://github.com/enix/kube-image-keeper#garbage-collection-and-limitations). Beyond that, the kuik components are quite lightweight in terms of computing resources. The following shows CPU and RAM usage under default settings, with both controllers in HA mode:

    $ kubectl top pods
    NAME                                             CPU(cores)   MEMORY(bytes)
    kube-image-keeper-0                              1m           86Mi
    kube-image-keeper-controllers-5b5cc9fcc6-bv6cp   1m           16Mi
    kube-image-keeper-controllers-5b5cc9fcc6-tjl7t   3m           24Mi
    kube-image-keeper-proxy-54lzk                    1m           19Mi

Warm-image

The WarmImage CRD takes an image reference and prefetches it onto every node in the cluster.

To install this custom resource in your cluster, just run:

    # Install the CRD and Controller.
    curl https://raw.githubusercontent.com/mattmoor/warm-image/master/release.yaml \
      | kubectl create -f -

Alternatively, you can git clone the repository and run:

    # Install the CRD and Controller.
    kubectl create -f release.yaml

Conclusion

In this article, we showed how to speed up pod startup by caching images on nodes. By prefetching container images onto the worker nodes of your Kubernetes cluster, you can significantly reduce Pod startup time, even for large images, down to a few seconds. This technique can benefit workloads such as machine learning, simulation, data analysis and code building by improving container launch performance and overall workload efficiency.

With no additional infrastructure or Kubernetes resources to manage, this approach provides a cost-effective solution to the problem of slow container startup in Kubernetes-based environments.